Modern Rendering Patterns

Building on the web has never been more powerful than it is in 2022, and knowing which patterns make the most sense for your use case can give your application huge performance benefits.

Now, I'm not here to talk bad about certain patterns and praise others; I'm just here to cover some common patterns we see nowadays and their tradeoffs for specific use cases!

Ideally, we just want to develop, preview, ship, impress users, and change the world with our revolutionary ideas.

Unfortunately, in most cases, it doesn't quite end up this way. We might accidentally introduce really long build times, frustrate our users because the website loads really slowly or has a jumpy UI, and get hit with a huge server bill at the end of the month for a project that didn't even gain traction, as the website never got great SEO due to bad performance.

Although our idea might have changed the world, the way that we architected our project prevented this from ever happening!

So... what can we do about this?

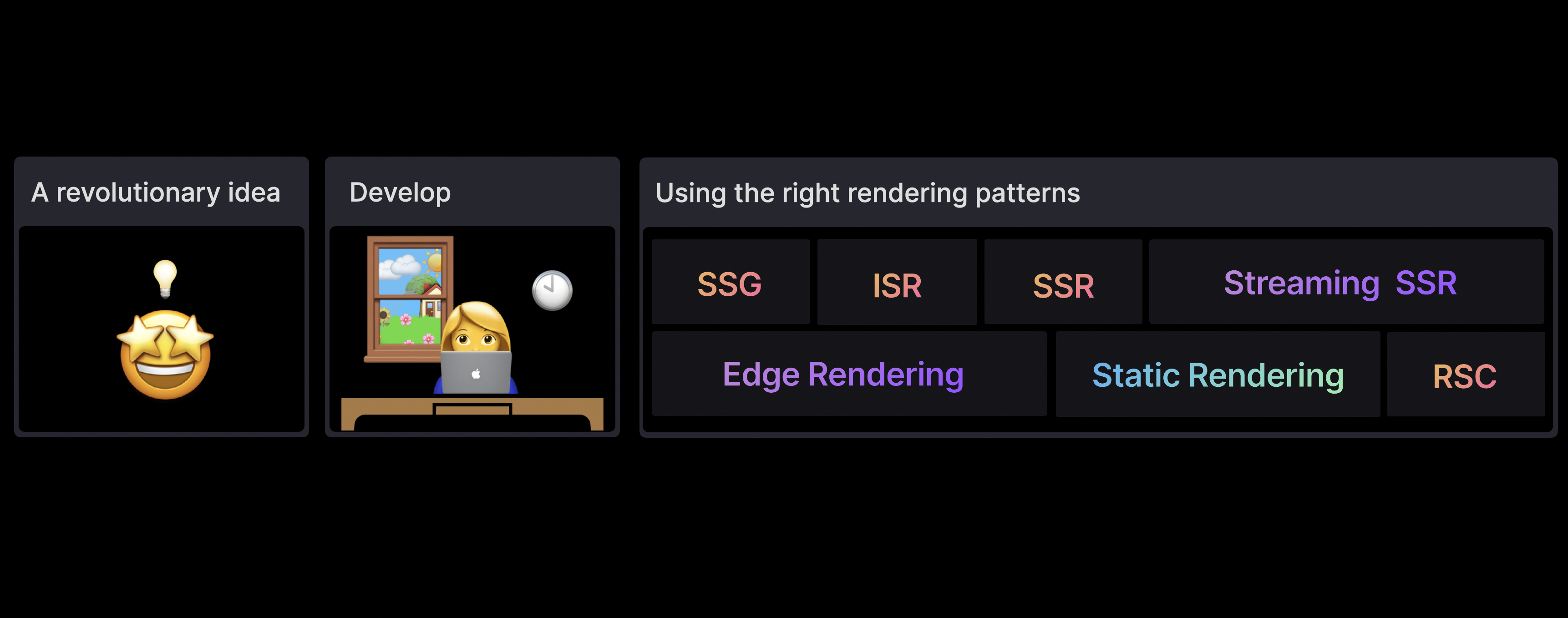

We have to start implementing the right rendering patterns in our applications! Knowing how to apply these techniques can massively improve your app's performance, giving you a great product to share with the world.

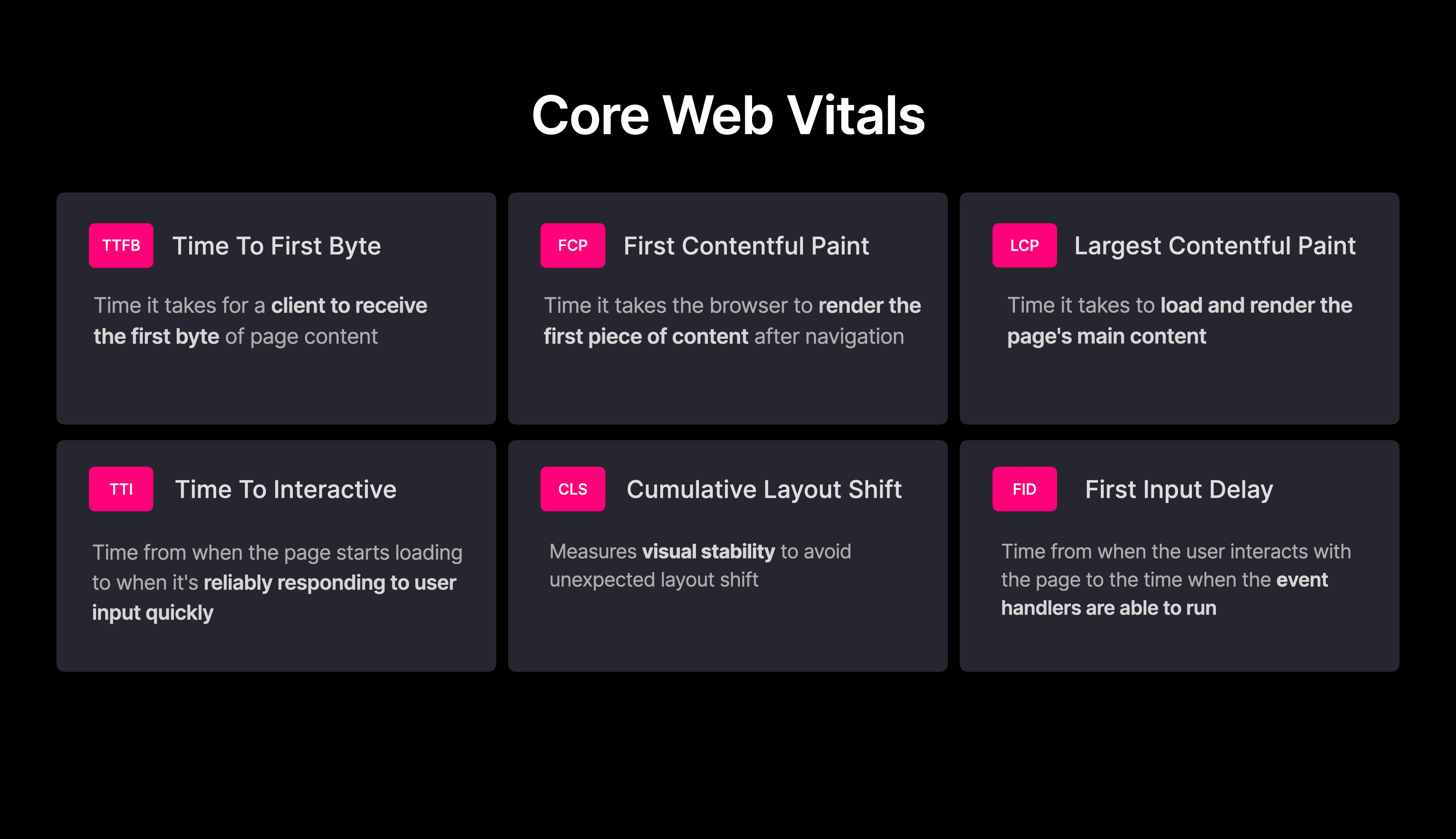

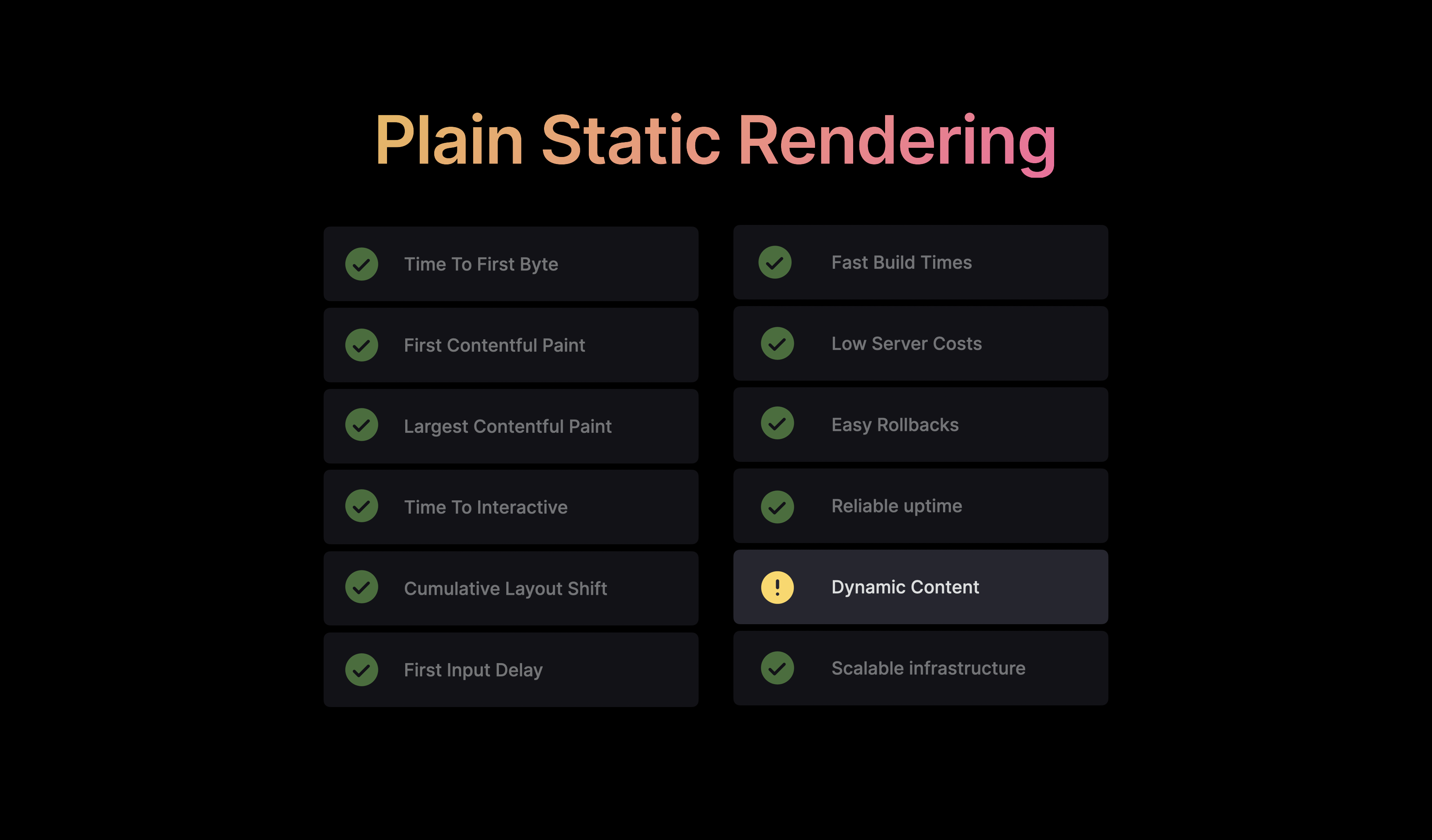

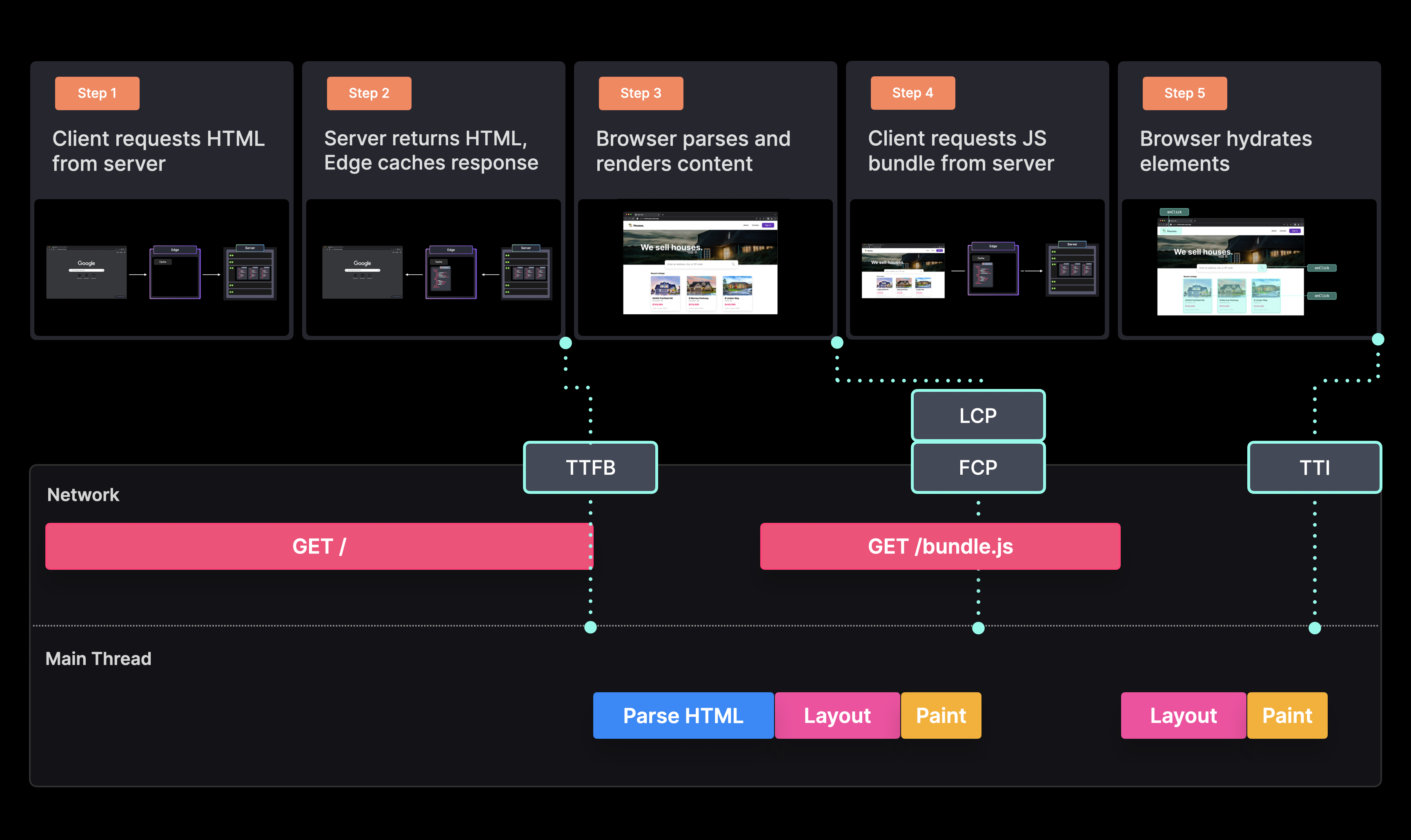

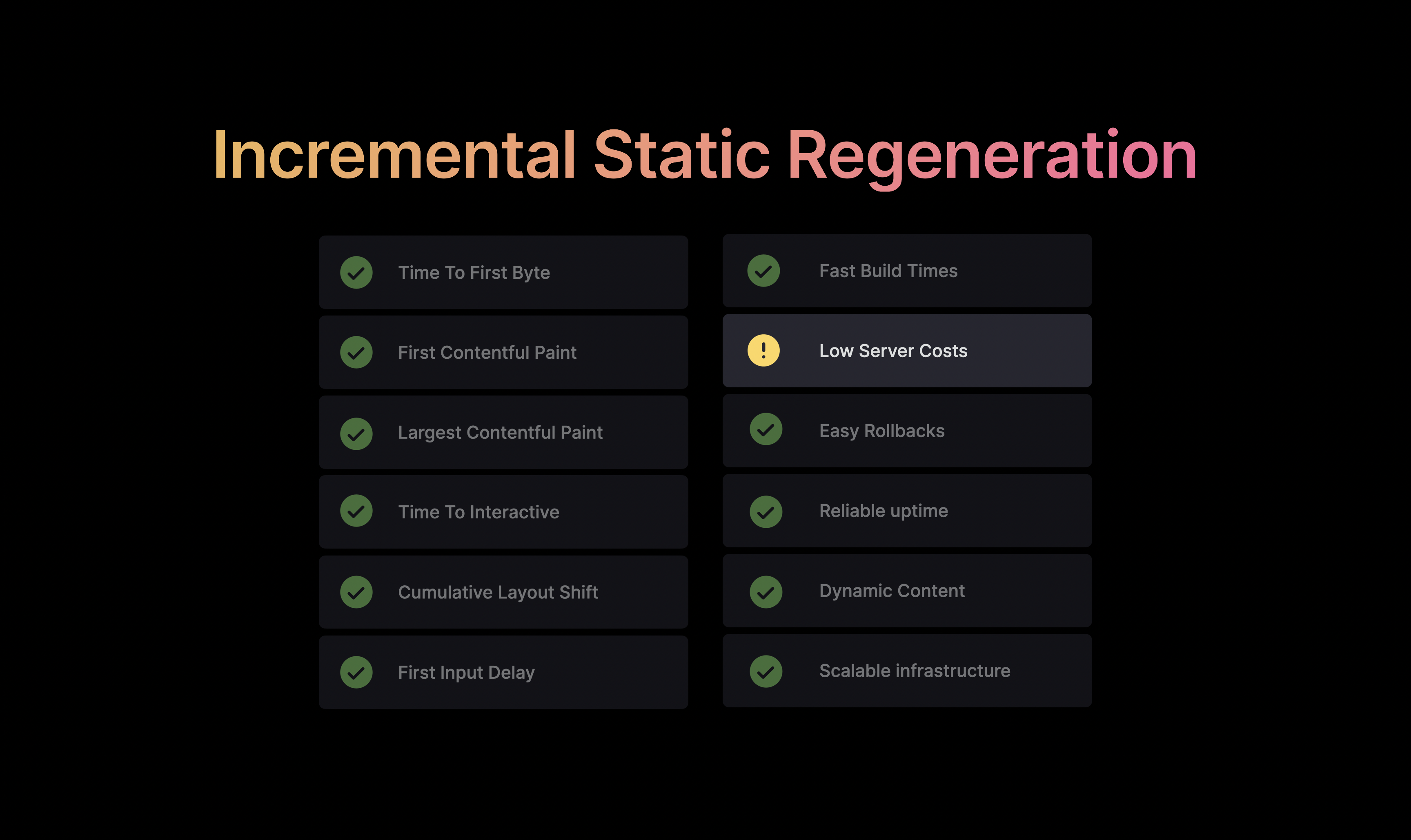

Usually when we talk about web performance, we think about optimizing for the Core Web Vitals.

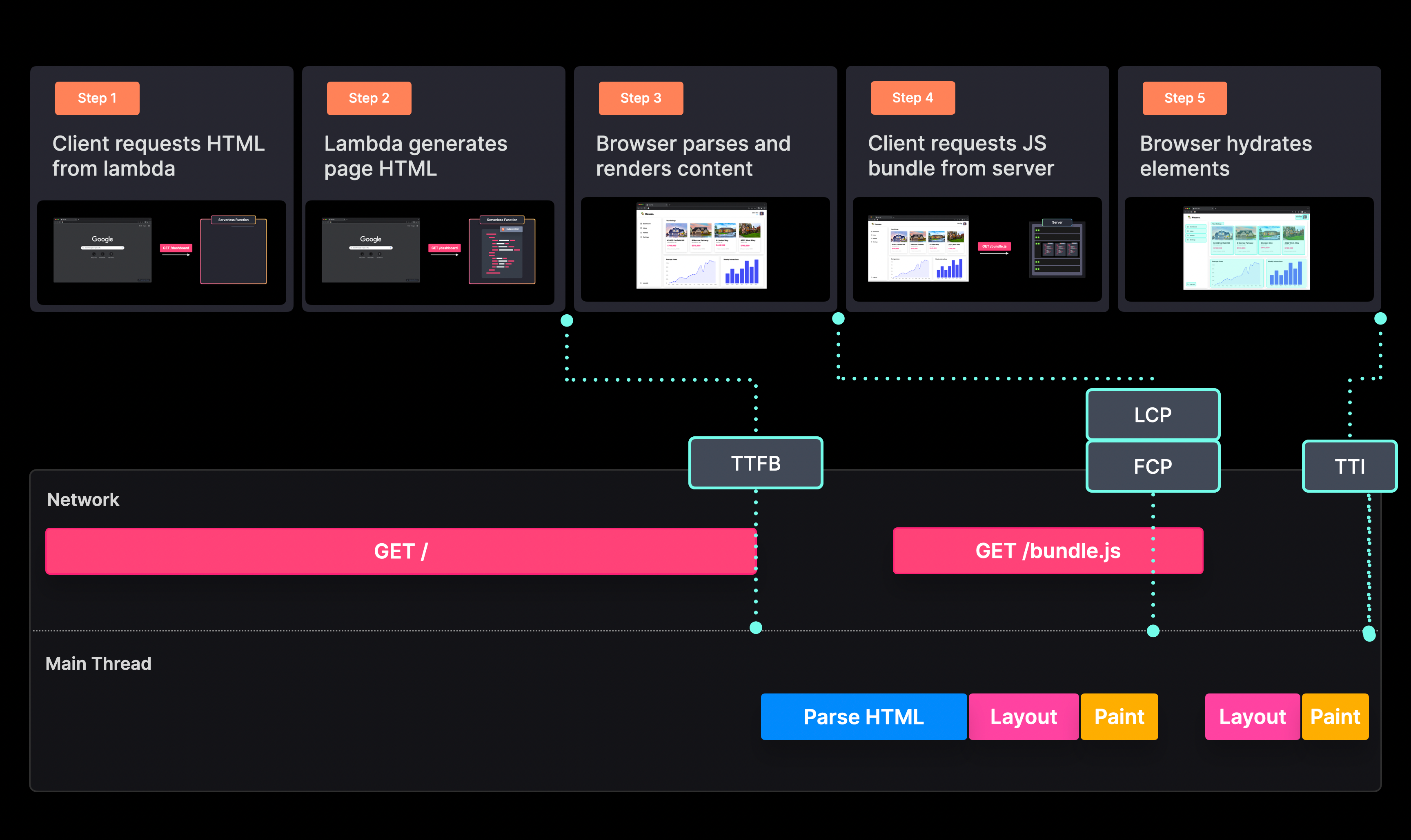

These are a set of useful measurements that tell us how well our site performs. For example, the TTFB (Time to First Byte) measures the time it takes for the server to respond with the initial data, and the FCP (First Contentful Paint) measures how quickly your users see useful content on their screen for the first time. I won't go over all of these right now.

If we want to have a website with great user experience and optimal SEO, we have to start caring about optimizing for these measurements.

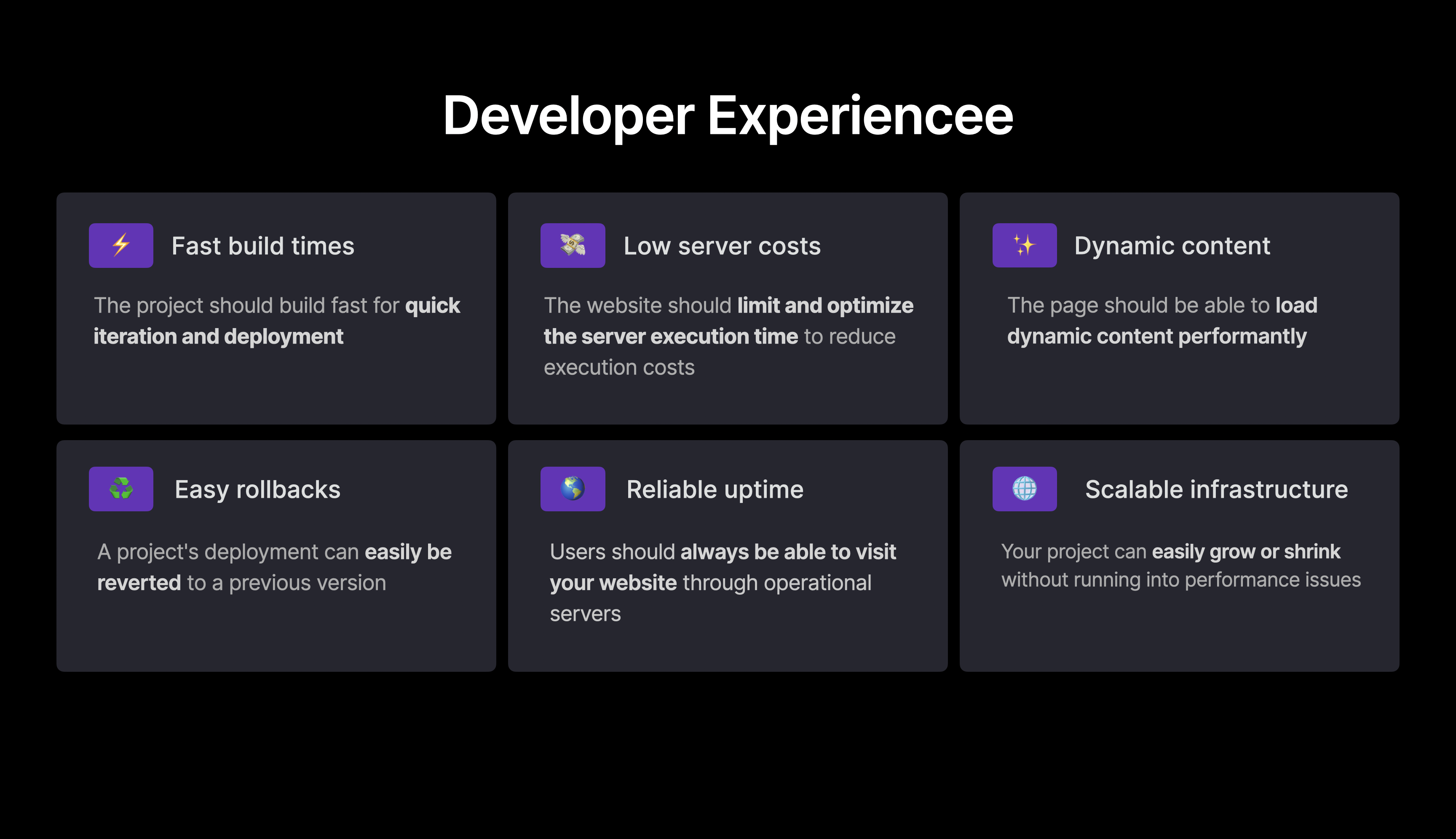

But that’s not all: we also want to have the best developer experience!

We want a project with short build times so we can iterate quickly, low server costs so we don’t go bankrupt, a performant way to show dynamic content when needed, and no scalability issues further down the line when our website goes viral.

Although this seems like a lot to keep in mind, using the right rendering technique can really help you out here to create a great user and developer experience.

Now that we know why rendering patterns are important, how do you know which ones make the most sense for your website? And if you're using Vercel, where you can even choose this on a per-page basis, which one makes the most sense for that specific page?

Spending one minute on Google or on tech Twitter can already feel pretty overwhelming, with all the new terms, abbreviations, and latest trendy ways to render data, and people’s opinions on them.

All patterns out there have their use case. No single pattern is better than the others; what matters is knowing their tradeoffs.

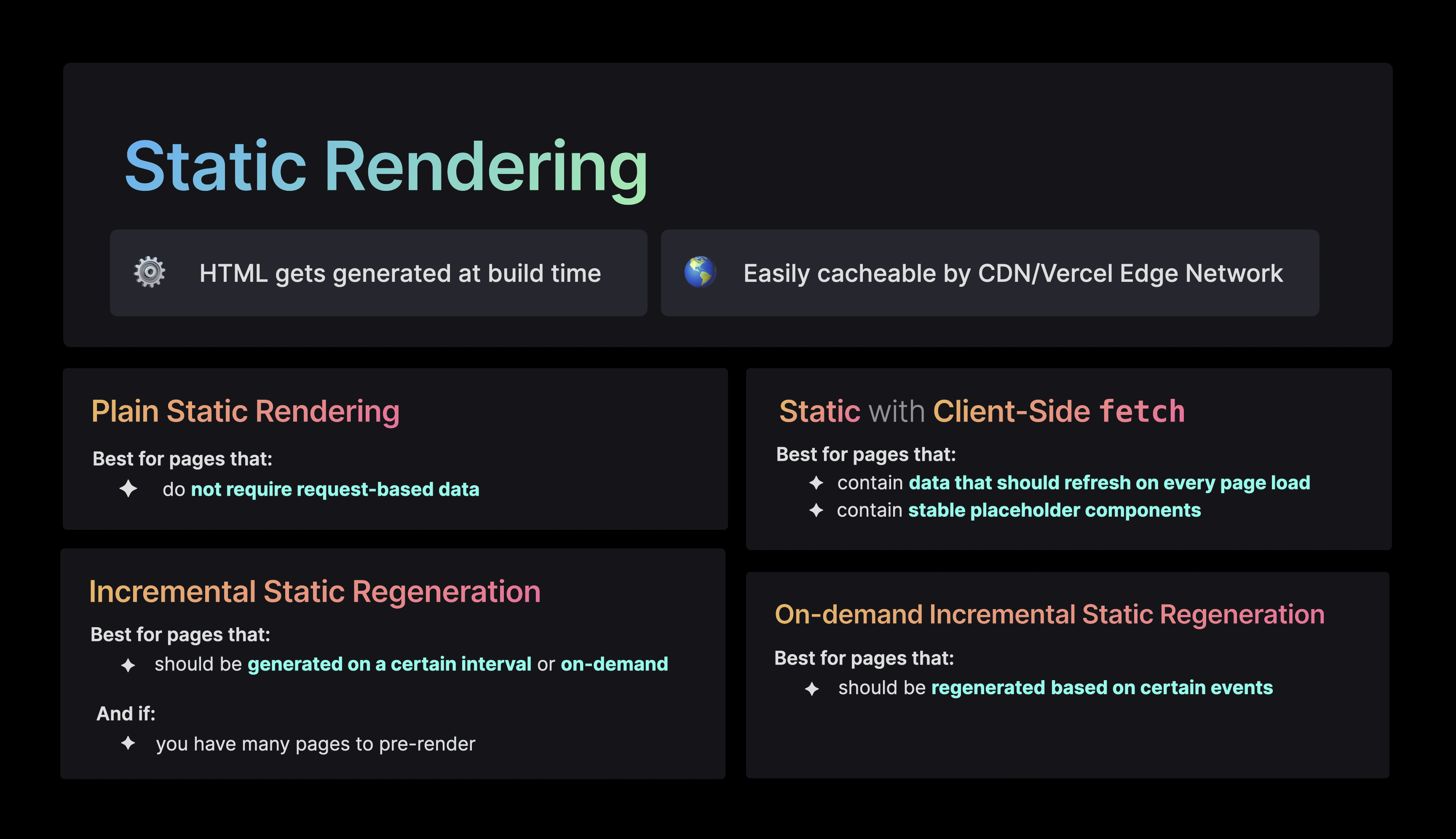

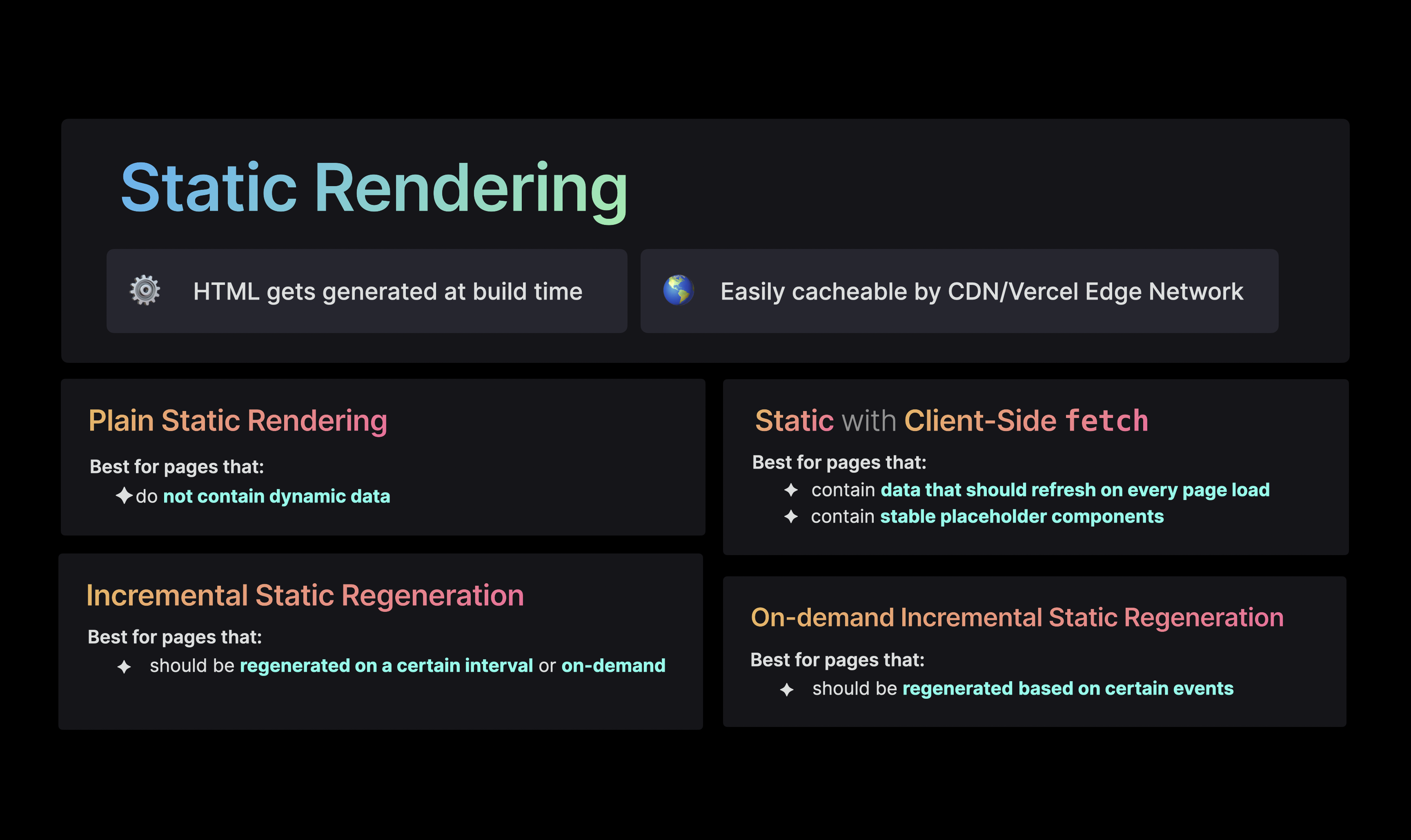

First, let’s talk about a pattern that may seem very basic and straightforward, namely Static Rendering.

Although it might seem simple, there are many variations to static rendering to serve a lot of different use cases. Static rendering is a popular pattern as it comes with so many performance benefits.

With static rendering, the entire HTML gets generated at build time.

Since the files are static, they’re easily cacheable by a CDN, or on Vercel by the Edge Network. This makes it possible to get extremely fast responses, since the CDN can quickly return the cached file instead of having to request it from the origin server.

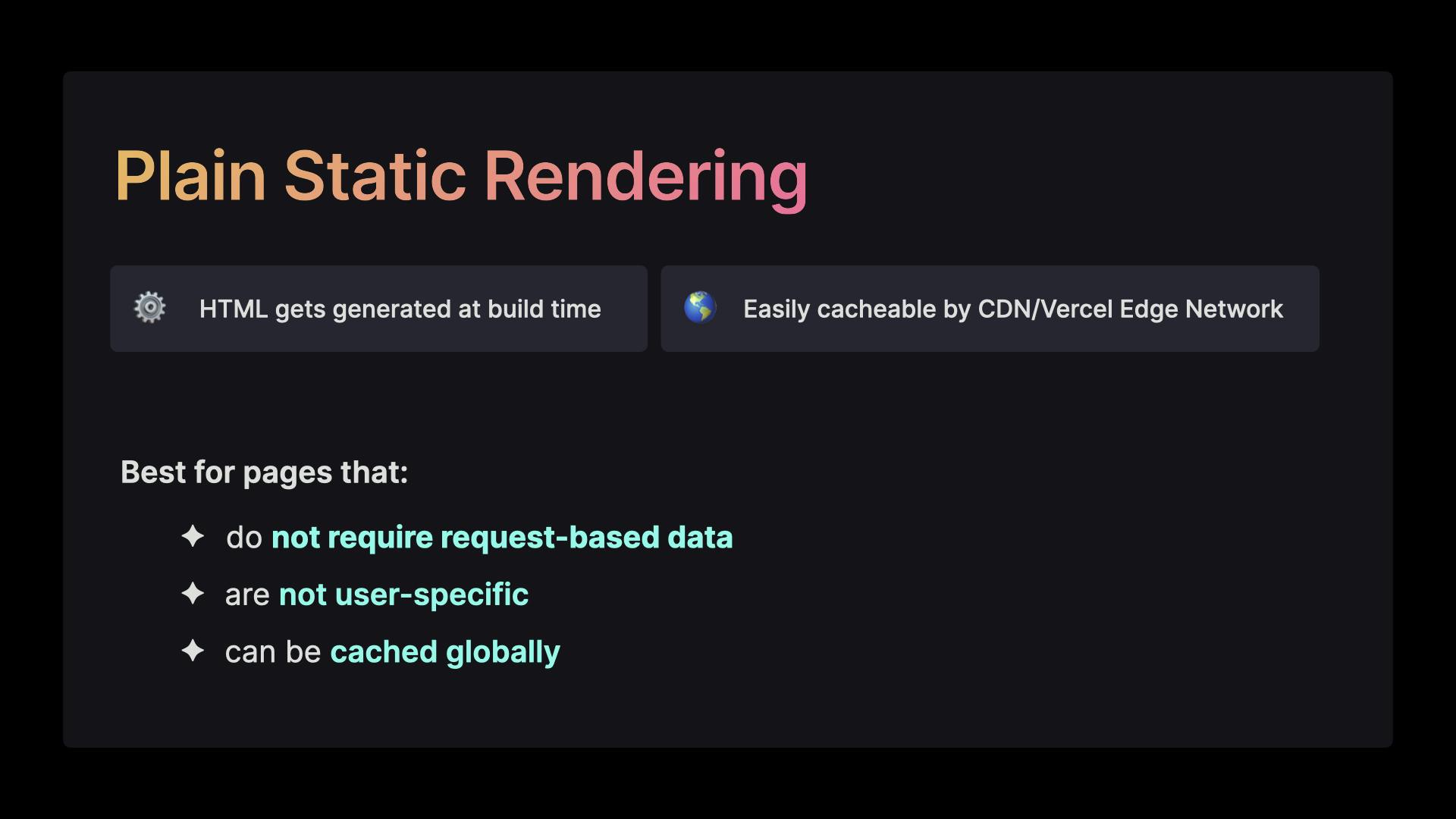

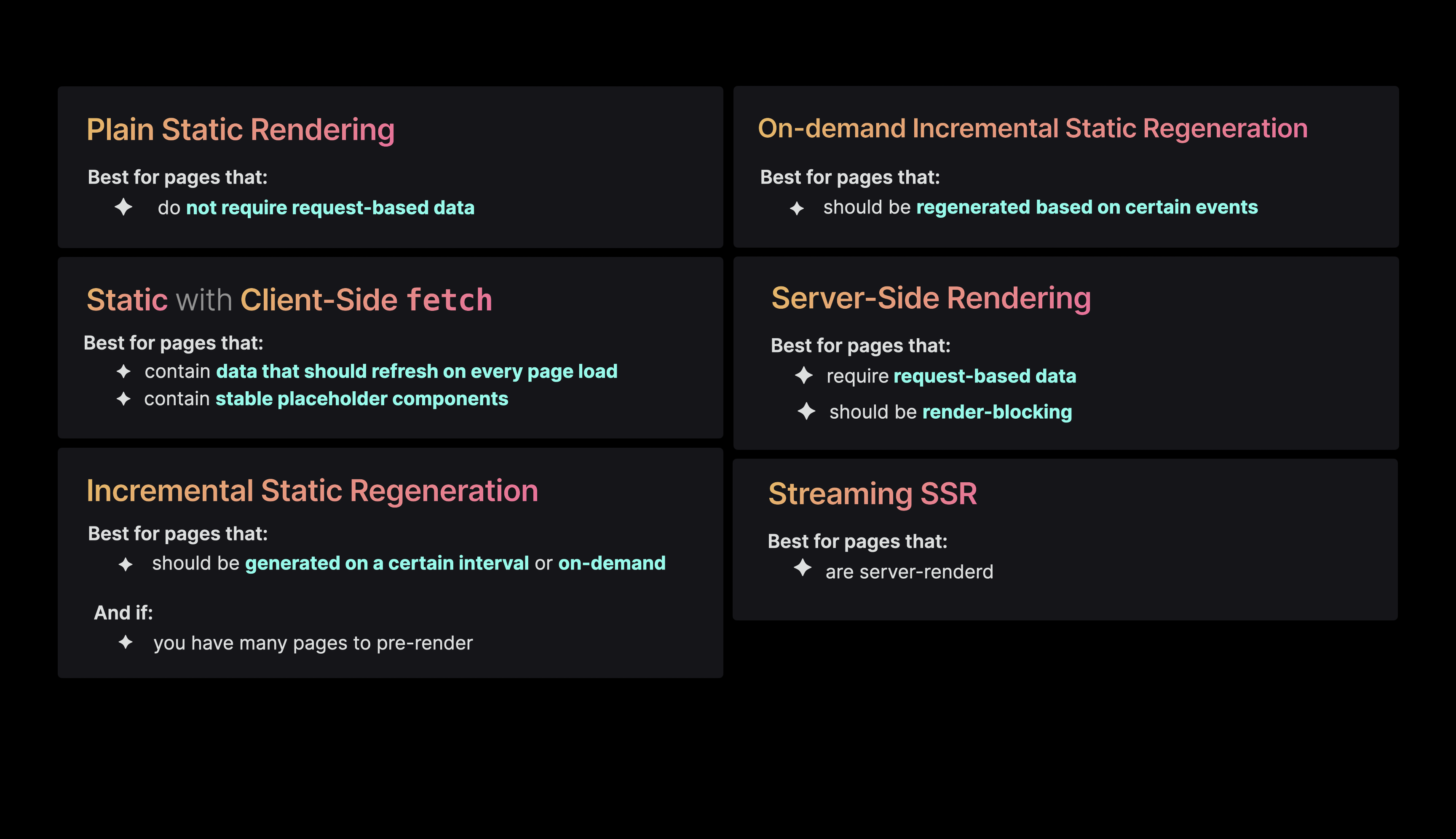

First up is Plain Static Rendering (I'm just calling it plain static rendering since I couldn't really think of a better term lol, not sure if there is one).

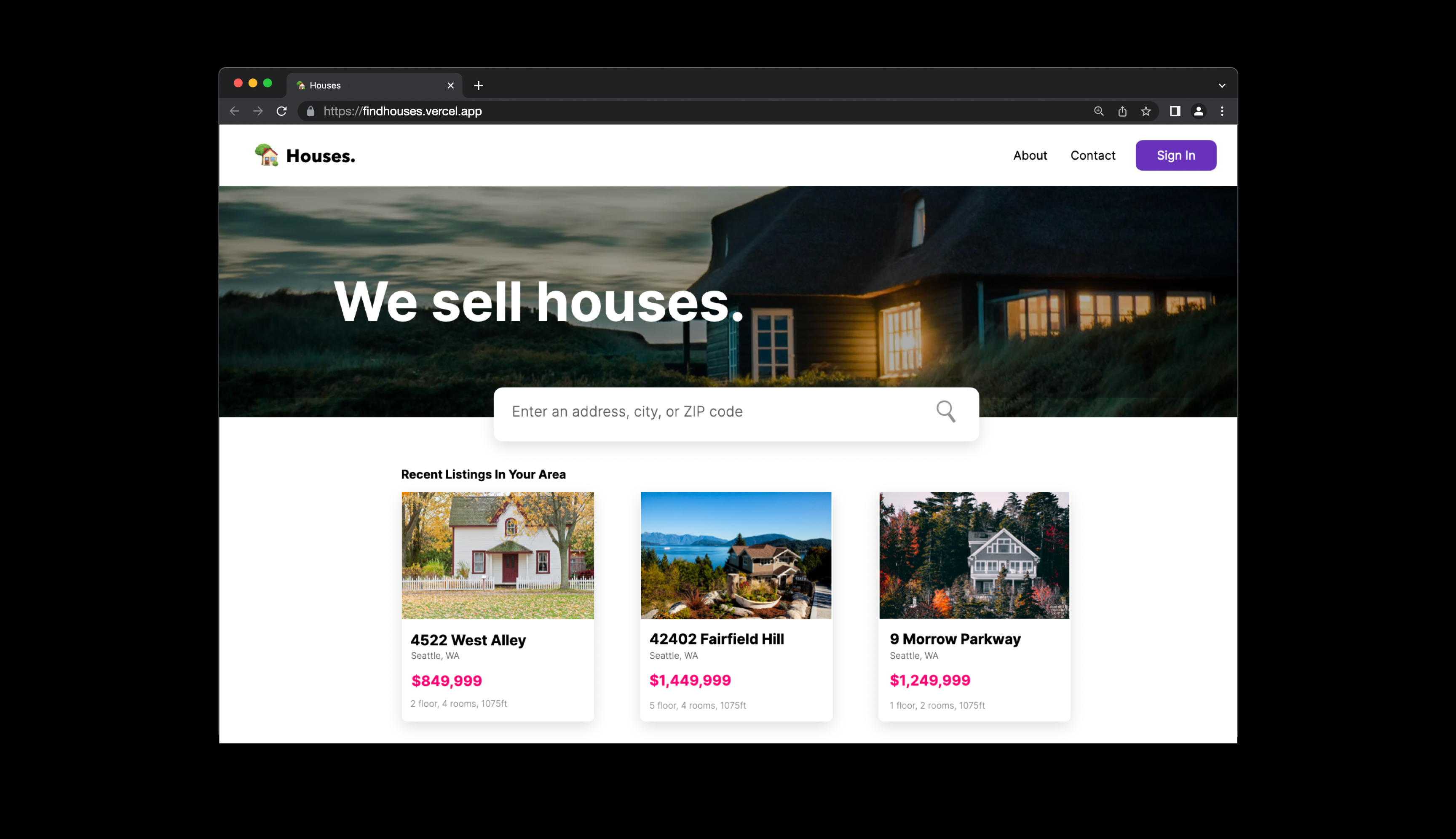

Plain Static Rendering is a technique we can use for very non-dynamic pages, such as the landing page of this fake demo real estate website.

This page is absolutely perfect for static rendering as it just shows the same data for everyone globally. We aren’t fetching any data or showing any personalized components.

When we deploy this, in this case to Vercel, the HTML gets generated for the pages, and persisted to static storage.

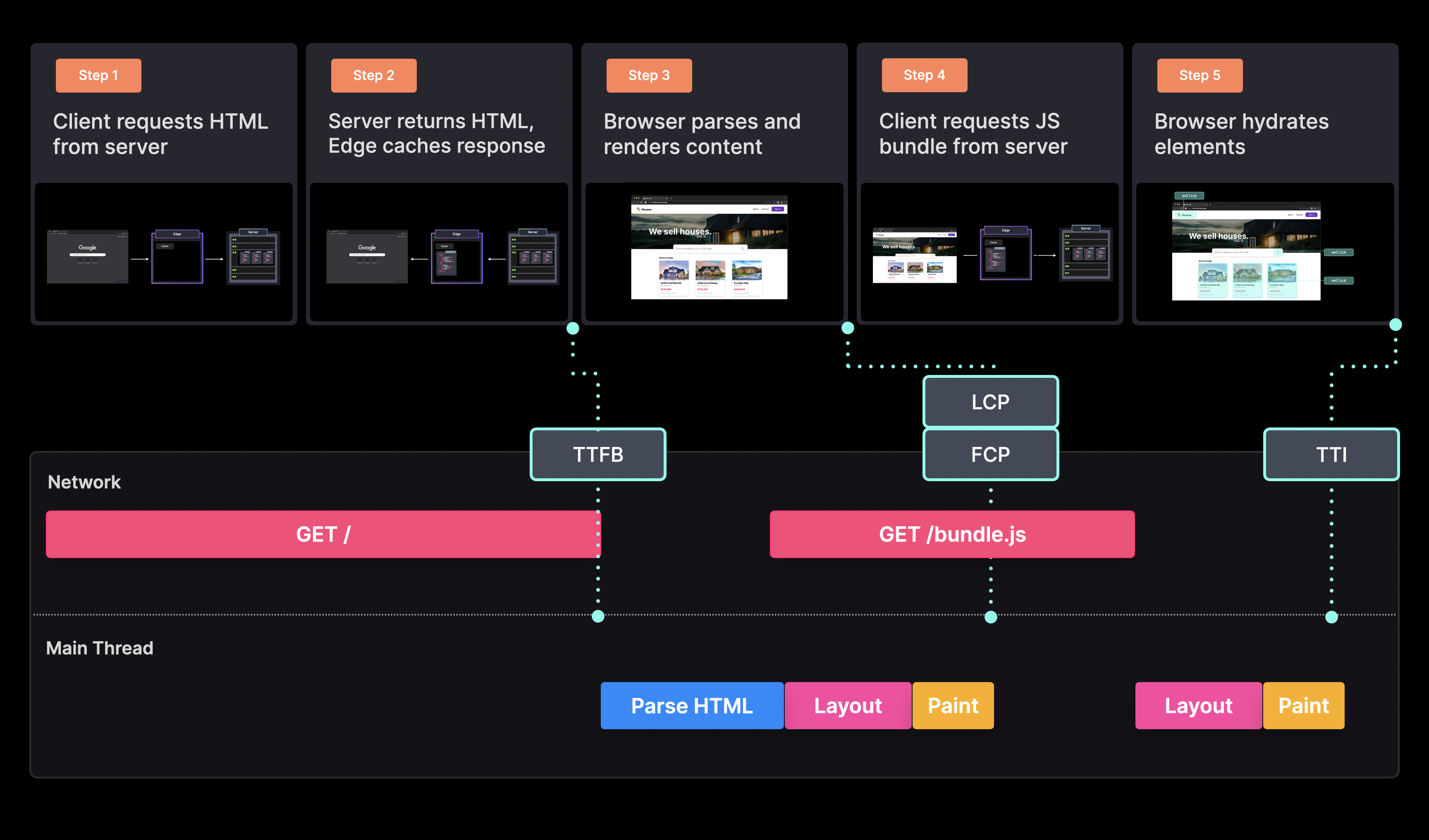

When a user visits the website, a request is made to the server.

The server can quickly return the pre-generated HTML to the client, and as it gets returned, the Edge location closest to the user caches the response.

The browser renders the HTML, and requests a JavaScript bundle to hydrate the page. (Hydration is the process of binding event handlers to the HTML elements to make them interactive.)

From a performance perspective, this is a great approach!

We can have an extremely quick TTFB since the server already contains the pre-generated HTML, and the browser can quickly render something to the screen, resulting in a fast FCP and LCP. We also don’t have to worry about layout shift since we’re not dynamically loading components.

Plain Static Rendering is great for your Core Web Vitals, especially in combination with a CDN. However, in most cases, we'll probably want to show some dynamic data.

Say that instead of showing some services here, we actually want to show some recent listings. We could just hard-code that data right in the page, but in reality we’re probably using some data provider to get our listings from.

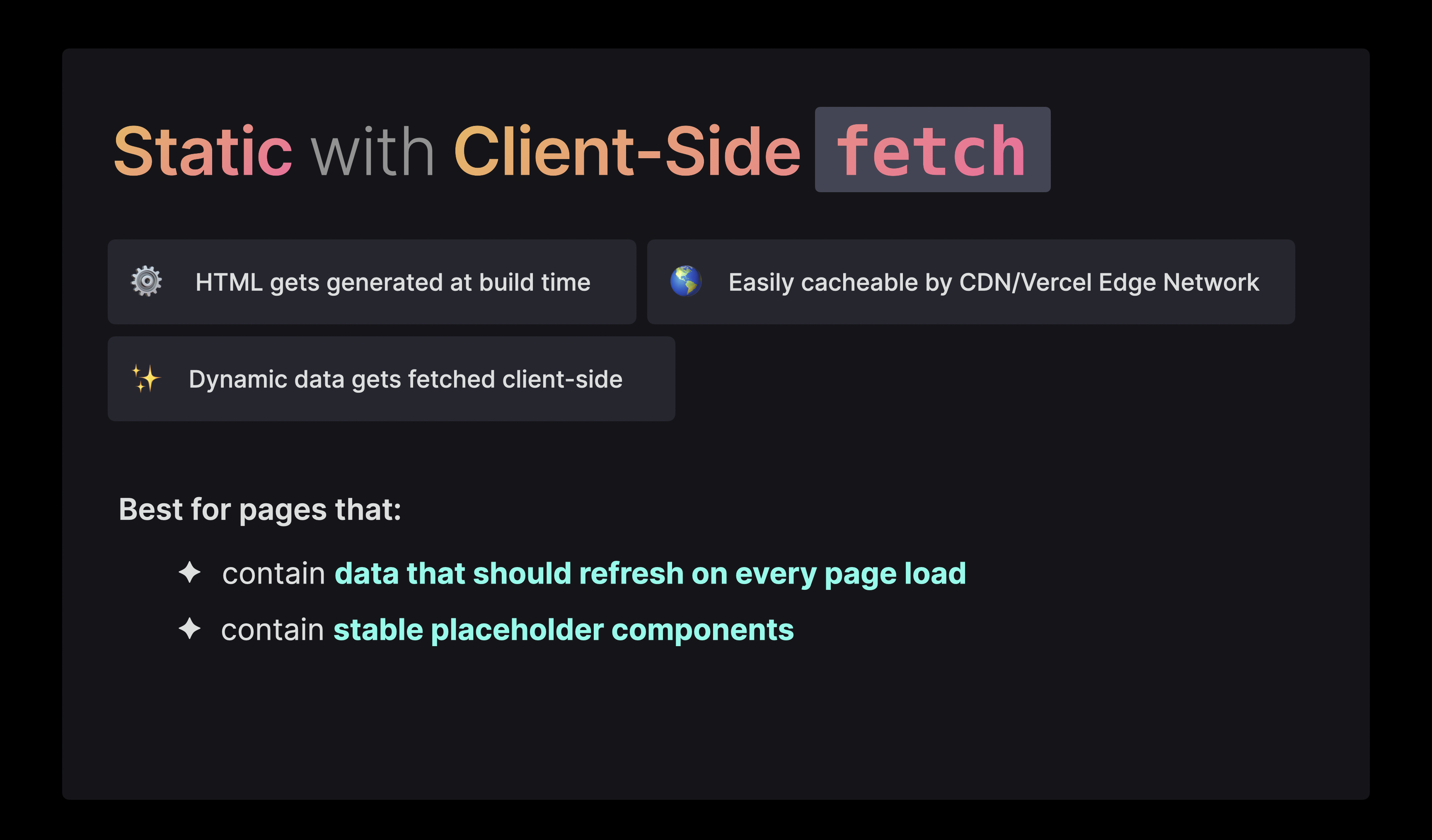

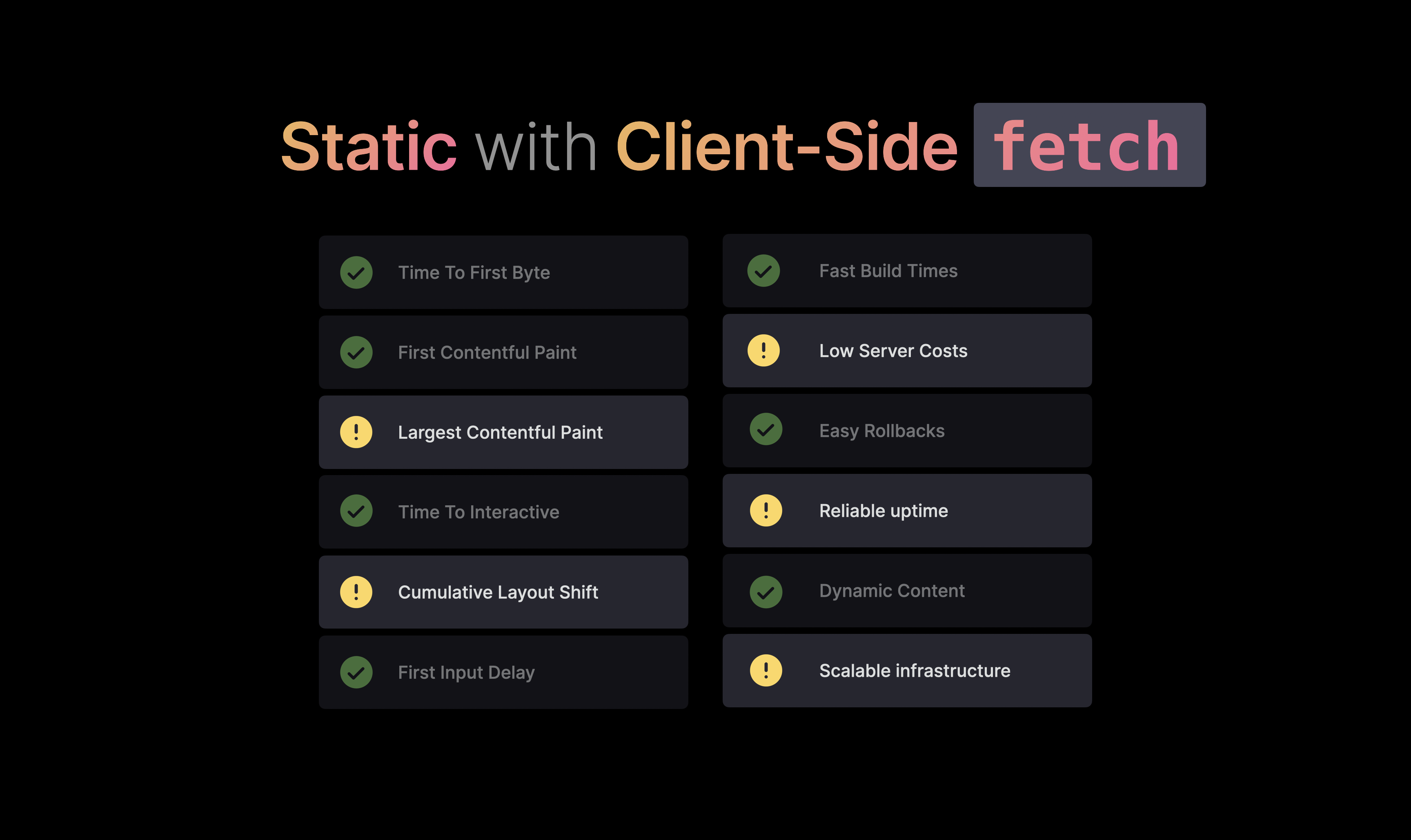

One approach we can take here is to use Static Rendering with Client-Side fetch. This is usually a great pattern for pages that contain data that should be updated on every request.

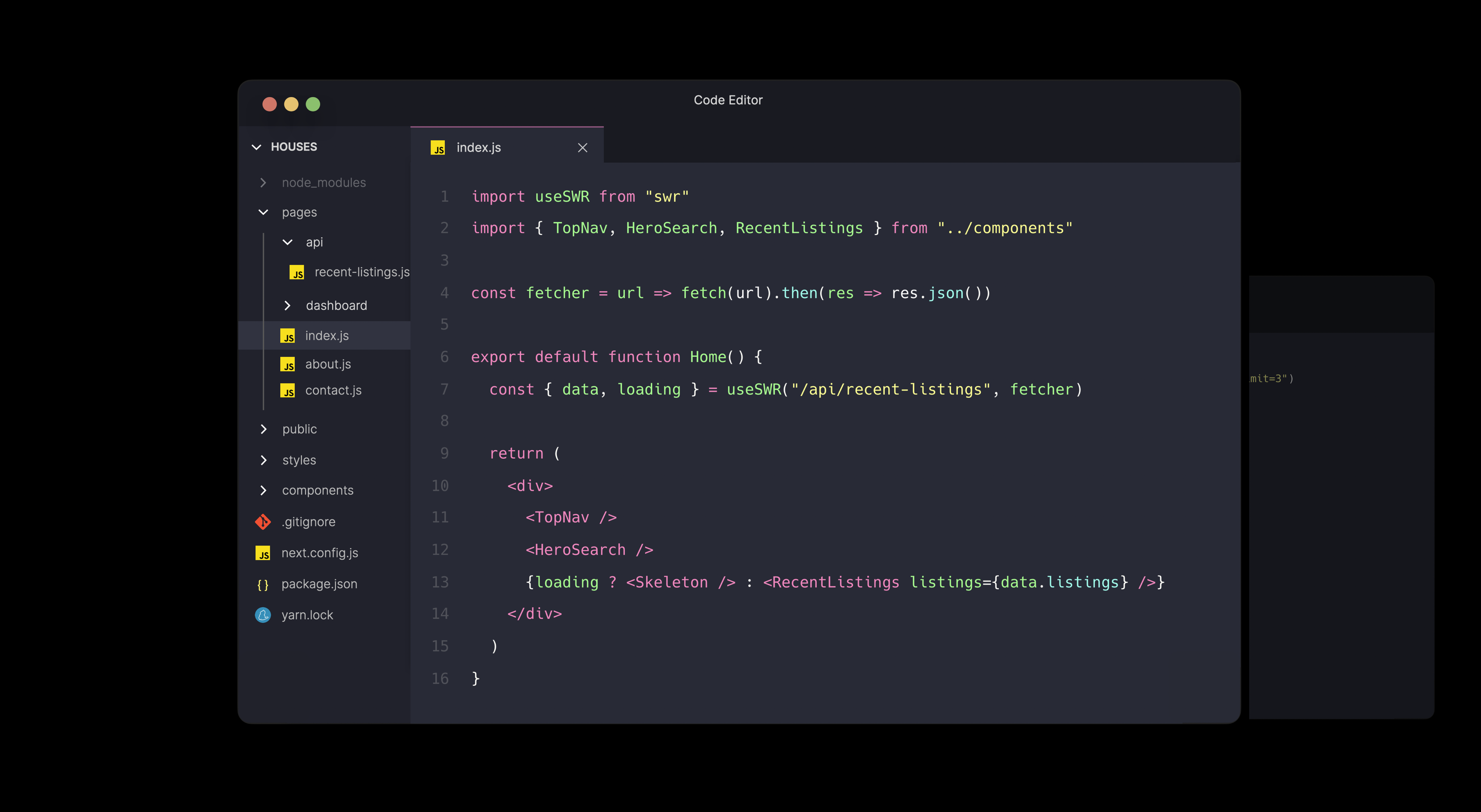

We can statically render the website like we did before, and fetch the data on the client (using, for example, SWR) as soon as the page has loaded. While the data is being fetched, we’ll show a skeleton component.

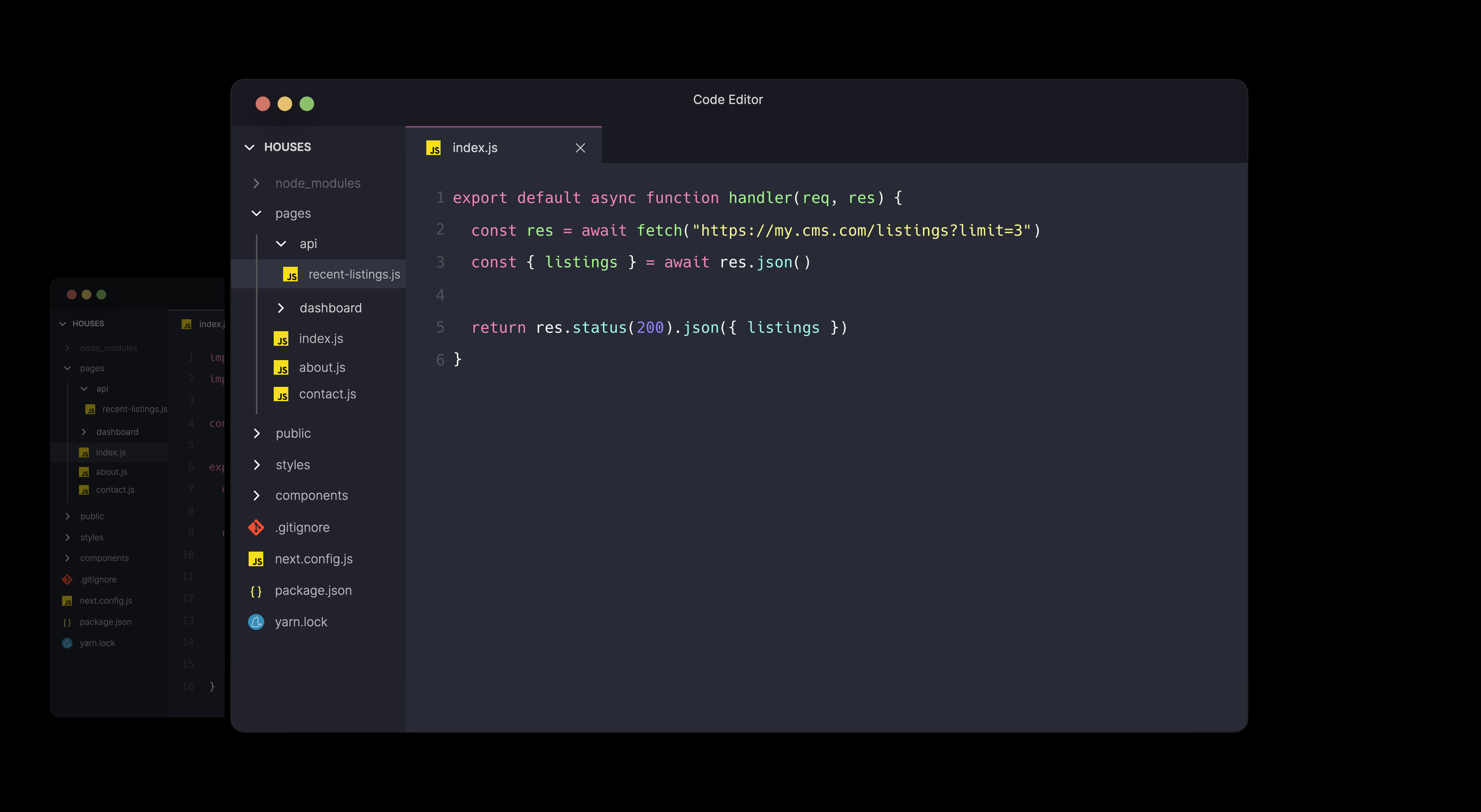

We also need to create a custom API route to fetch the data from. Within the API route, we retrieve the data from our CMS, and return this data.
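As a rough sketch, an API route along these lines could sit behind the client-side fetch. The `ApiRequest` and `ApiResponse` types are minimal stand-ins for Next.js' `NextApiRequest` and `NextApiResponse` so the snippet stays self-contained, and `getListingsFromCms` and the `Listing` shape are hypothetical placeholders for whatever your CMS client provides.

```typescript
// Hypothetical shape of a listing; adjust to your own data model.
type Listing = { id: string; title: string; price: number };

// Minimal stand-ins for Next.js' NextApiRequest / NextApiResponse,
// declared locally so this sketch has no dependencies.
type ApiRequest = { method?: string };
type ApiResponse = {
  status(code: number): ApiResponse;
  json(body: unknown): void;
};

// Hypothetical CMS call; a real project would query its data provider here.
async function getListingsFromCms(): Promise<Listing[]> {
  return [
    { id: "1", title: "Canal-side apartment", price: 450000 },
    { id: "2", title: "Family home with garden", price: 720000 },
  ];
}

// Would live in a file such as pages/api/listings.ts.
export default async function handler(
  req: ApiRequest,
  res: ApiResponse
): Promise<void> {
  if (req.method !== "GET") {
    res.status(405).json({ error: "Method not allowed" });
    return;
  }
  const listings = await getListingsFromCms();
  res.status(200).json({ listings });
}
```

On the client, a hook such as SWR's `useSWR("/api/listings", fetcher)` can then request this route and keep rendering the skeleton until `data` is defined.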

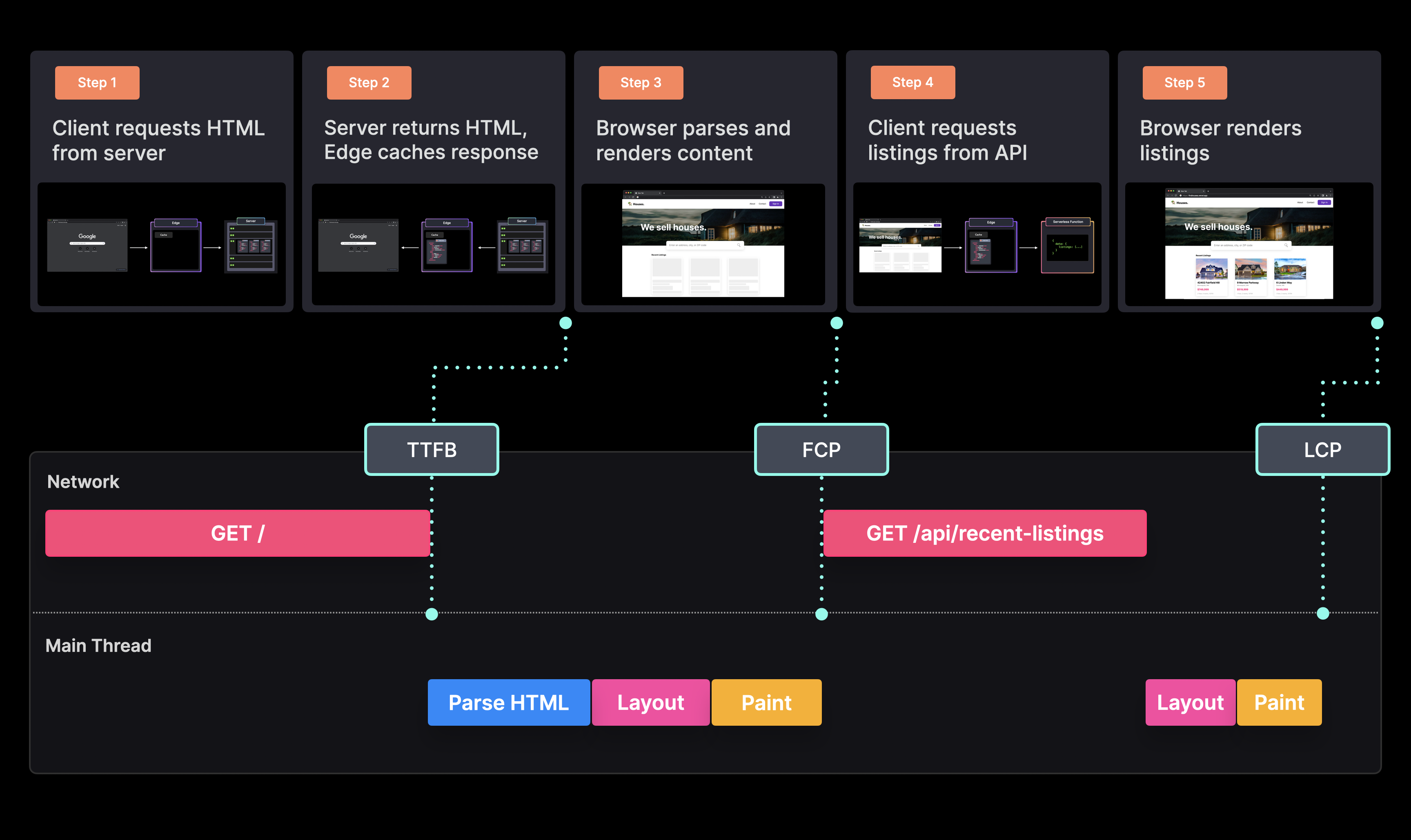

Now when the user requests the page, the server again returns the pre-generated HTML file. The user initially sees the skeleton UI since the data hasn't been fetched yet.

After parsing and rendering the initial content, the client fetches the data from the API route, and after getting a successful response, we can finally show the listings. (For simplicity, I'm not including the hydration call in this example.)

Although using Static Rendering with Client-Side fetch gives us a good TTFB and FCP, the LCP is not as good as it used to be, since we have to wait for the API route to return data in order to show the listings.

We might also run into layout shifts, which can happen if our skeleton component doesn't match with the content that eventually gets rendered after receiving the data.

Since we're calling the API route on every page request, we can also run into higher server costs as we pay for every execution.

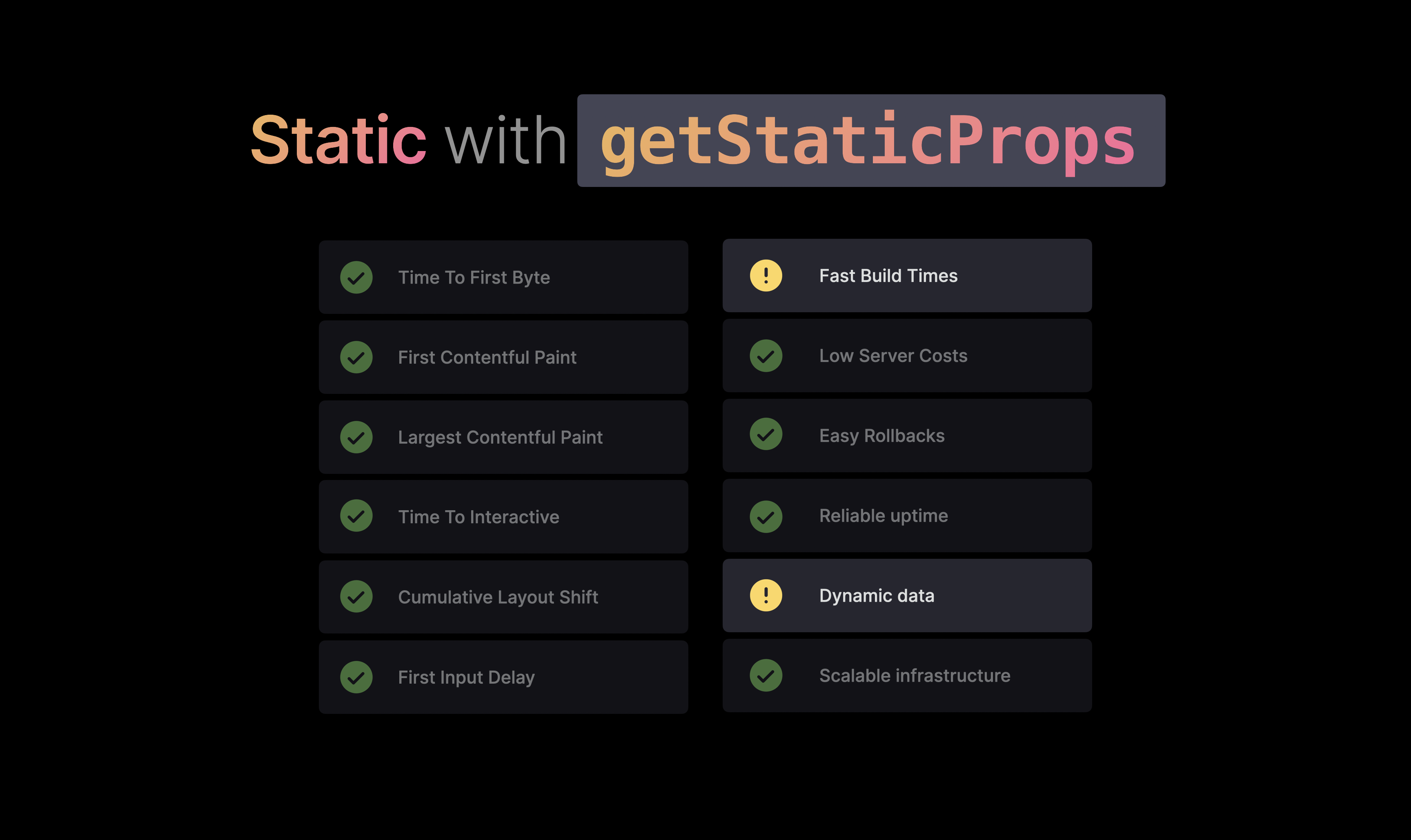

When you use Next.js, you can actually choose between a few more static approaches that really improve the performance of your app when working with dynamic data.

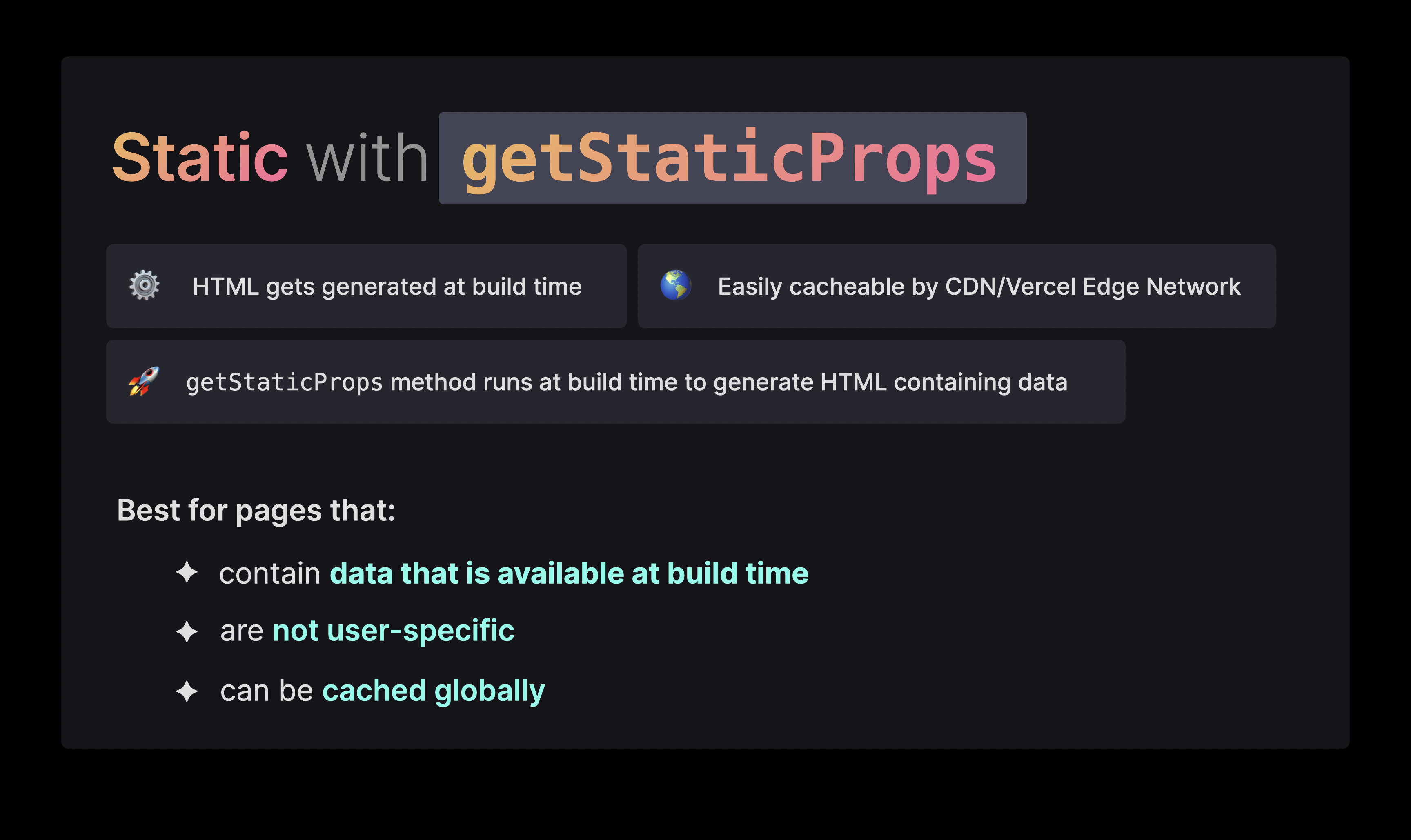

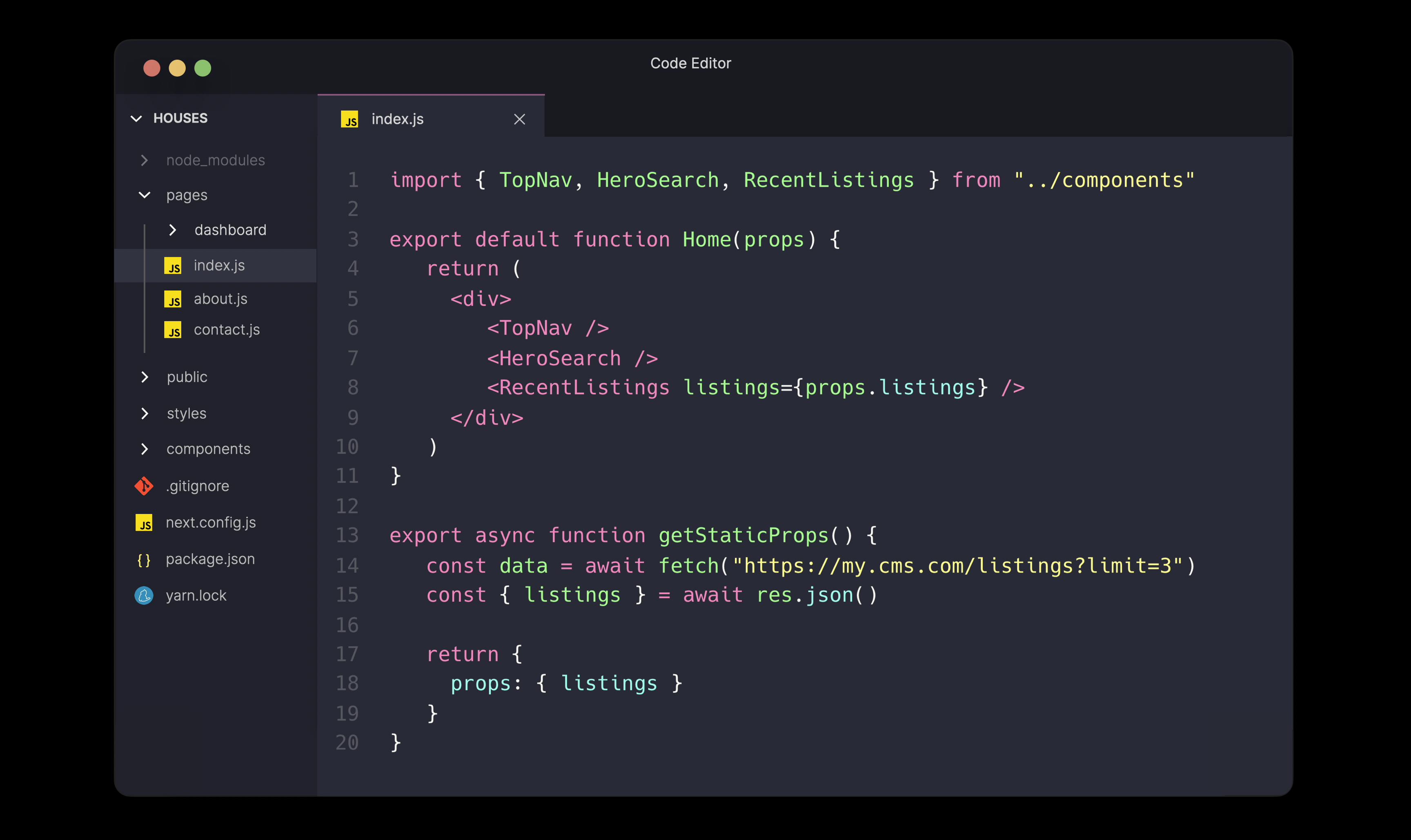

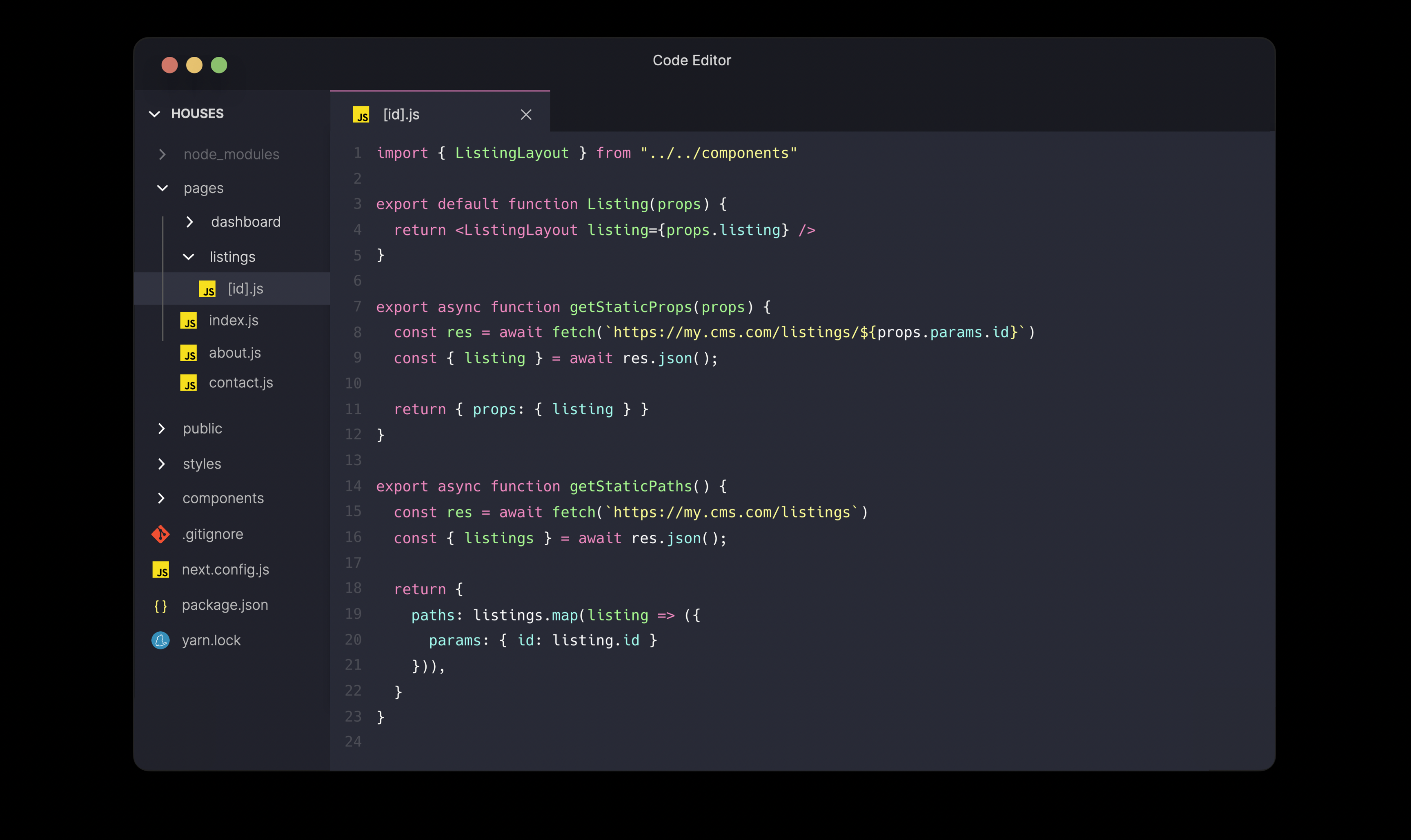

The first approach is using Static with getStaticProps. This method runs server-side at build time, meaning that we can directly call our data provider within this method. If your data is available at build time, this can be a really good solution to add dynamic data to a static page.

Since the getStaticProps method runs server-side, we no longer have to deal with custom API Routes to fetch the data from; we can do this directly within the method.

We also no longer have to show a skeleton component, as there will never be a loading state; the generated HTML already contains the fetched data.

When we build the project, the data provider gets called, and the returned data gets passed to the generated HTML.
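A minimal sketch of what this could look like is shown below. `getStaticProps` and its `{ props }` return shape are part of Next.js; `fetchRecentListings` and the `Listing` type are hypothetical stand-ins for your own data provider.

```typescript
type Listing = { id: string; title: string };

// Hypothetical data-provider call; stands in for a CMS or database query.
async function fetchRecentListings(): Promise<Listing[]> {
  return [
    { id: "1", title: "Canal-side apartment" },
    { id: "2", title: "Family home with garden" },
  ];
}

// Next.js calls this at build time for a statically generated page.
export async function getStaticProps(): Promise<{ props: { listings: Listing[] } }> {
  const listings = await fetchRecentListings();
  // Whatever is returned under `props` is passed to the page component
  // and ends up in the generated HTML.
  return { props: { listings } };
}
```

The page component then receives `listings` as a regular prop, so no API route or loading state is involved.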

Now when we request the page, we again fetch the HTML from the server. The response gets cached, rendered to the screen, and the browser again sends a request to fetch the JS bundles in order to hydrate the elements.

The network and main thread work are identical to what we saw before when we just statically rendered the content without injecting any data at build time, so from a user perspective, we again get great performance.

However, as a developer, you can quickly run into some issues.

Imagine if we statically built hundreds of pages, which can easily happen on a blog or ecommerce website, and we’re calling the getStaticProps method for all these pages. This can result in really long build times, and if you’re using an external API, you can even hit the request limit pretty fast, or get charged a lot of money if they charge per request.

Another issue is that we’re only renewing the data at build time, meaning we’d have to redeploy the website to update the content.

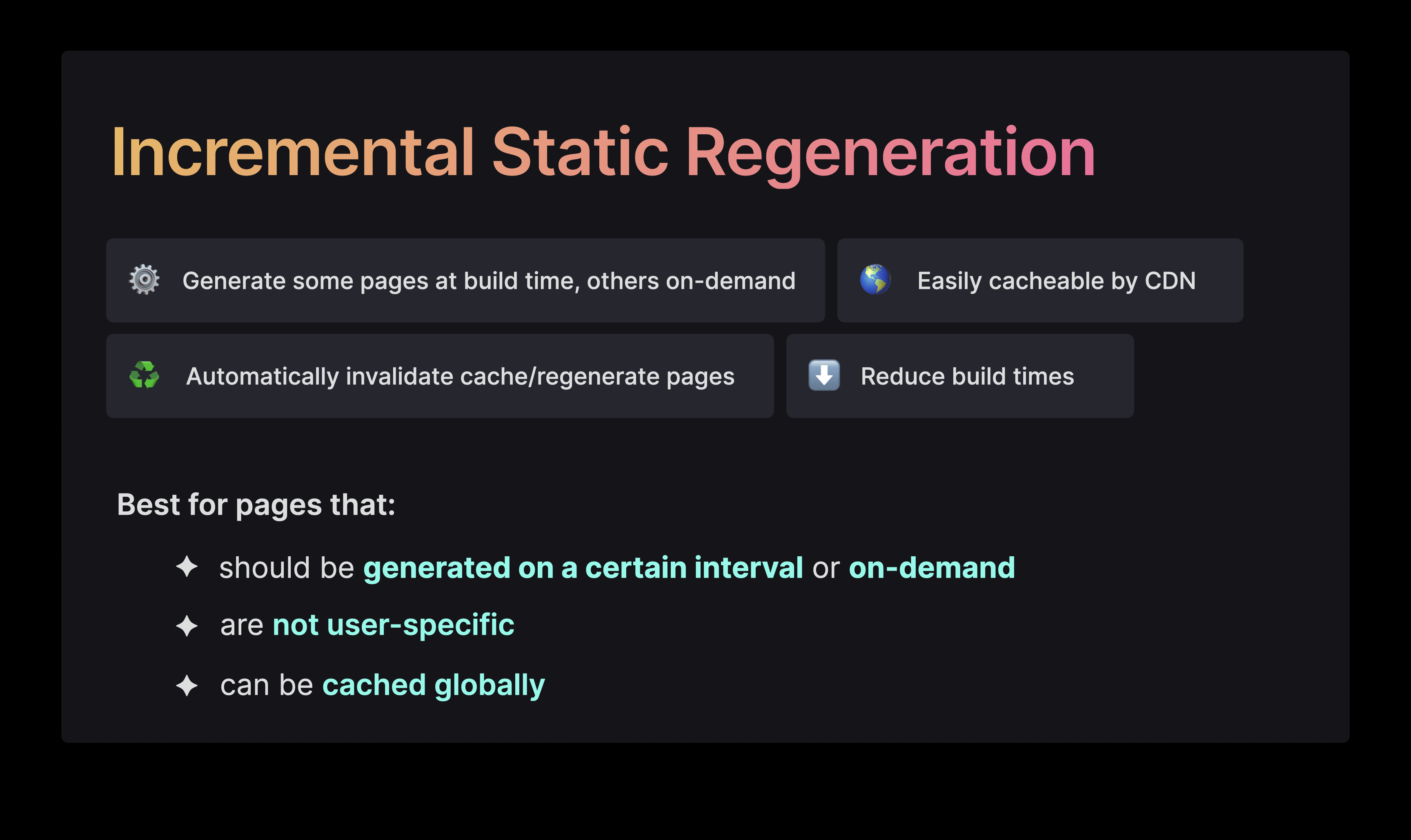

Luckily, we can use Incremental Static Regeneration to solve our build time and dynamic data issues.

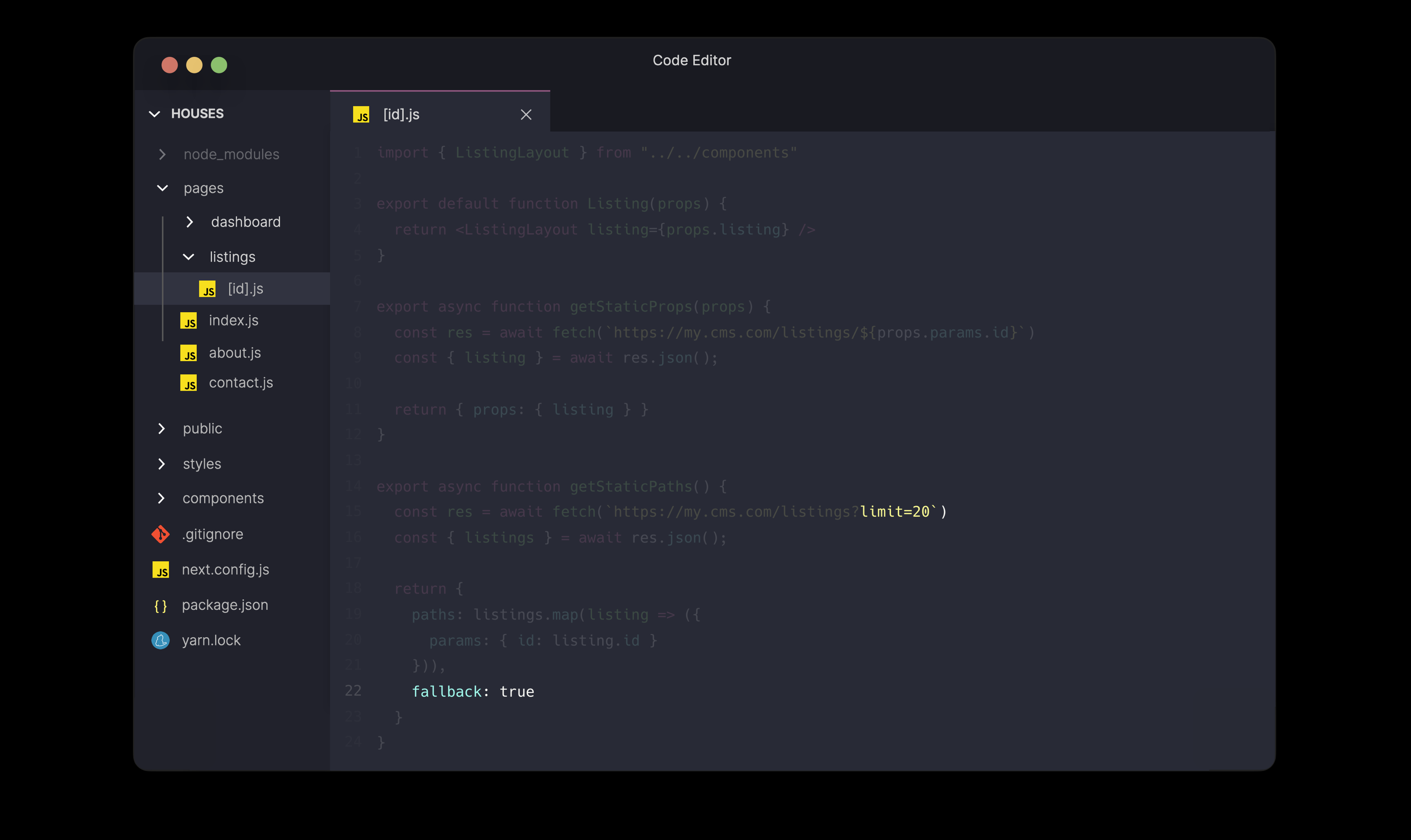

With ISR, we can decide to only pre-render certain pages, and render the other pages on-demand when the user requests them, resulting in much shorter build times. It also allows us to automatically invalidate the cache and regenerate the page after a certain interval.

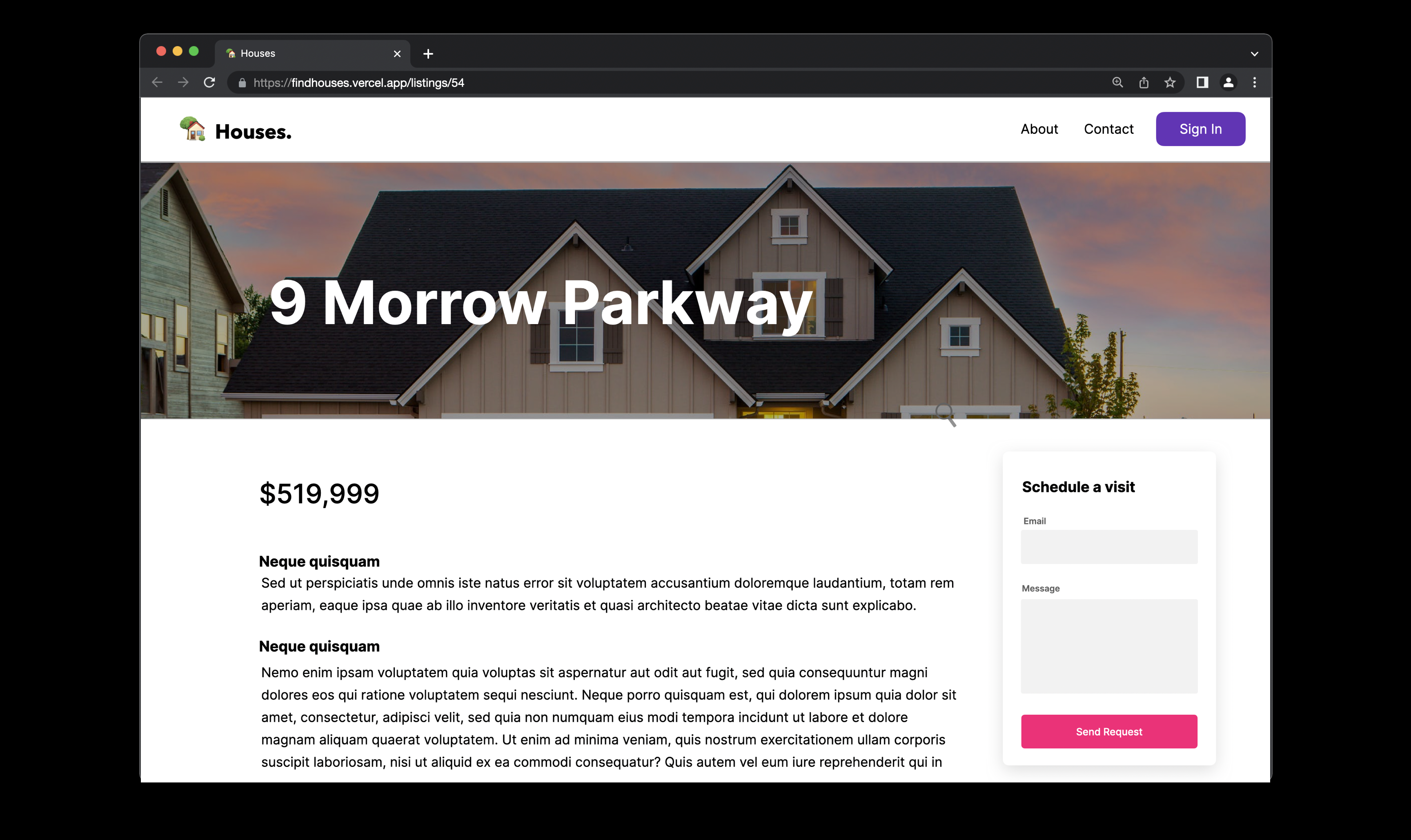

Imagine if we wanted to be able to show individual listings as well, and pre-render these pages to get great performance when a user clicks on a listing.

Using Next.js, we can combine dynamic routes with getStaticPaths to tell Next.js which pages to pre-generate, based on their route parameter.

In this case, we're fetching all listings and pre-generating the pages for each and every one of them. If we had thousands of listings, this would take a really long time to finish.

Instead, we can tell Next.js to only pre-generate a subset of all the pages, and render a fallback while the listing page is generated on demand.

The pre-rendered pages behave exactly the same as before. If a user requests a page that hasn't been generated yet, it gets generated on demand, after which it automatically gets cached by the edge. That means that only the first user might have a worse experience as the page still needs to be generated, but everyone else can benefit from getting a fast, cached response.
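Pre-generating only a subset could be sketched roughly as follows. `getStaticPaths`, `paths`, `params`, and `fallback` are Next.js API; `fetchPopularListings` and the choice of which listings count as "popular" are assumptions made for illustration.

```typescript
type Listing = { id: string; title: string };

// Hypothetical helper returning only the listings worth pre-rendering,
// for example the most visited ones.
async function fetchPopularListings(): Promise<Listing[]> {
  return [
    { id: "1", title: "Canal-side apartment" },
    { id: "2", title: "Family home with garden" },
  ];
}

// Would live in a dynamic route file such as pages/listings/[id].tsx.
export async function getStaticPaths() {
  const popular = await fetchPopularListings();
  return {
    // Only these paths are generated at build time.
    paths: popular.map((listing) => ({ params: { id: listing.id } })),
    // Any other id is generated on the first request; the page
    // renders its fallback state while that happens.
    fallback: true,
  };
}
```

With `fallback: true`, the page component is responsible for rendering something sensible (such as a skeleton) until the props for the new path arrive.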

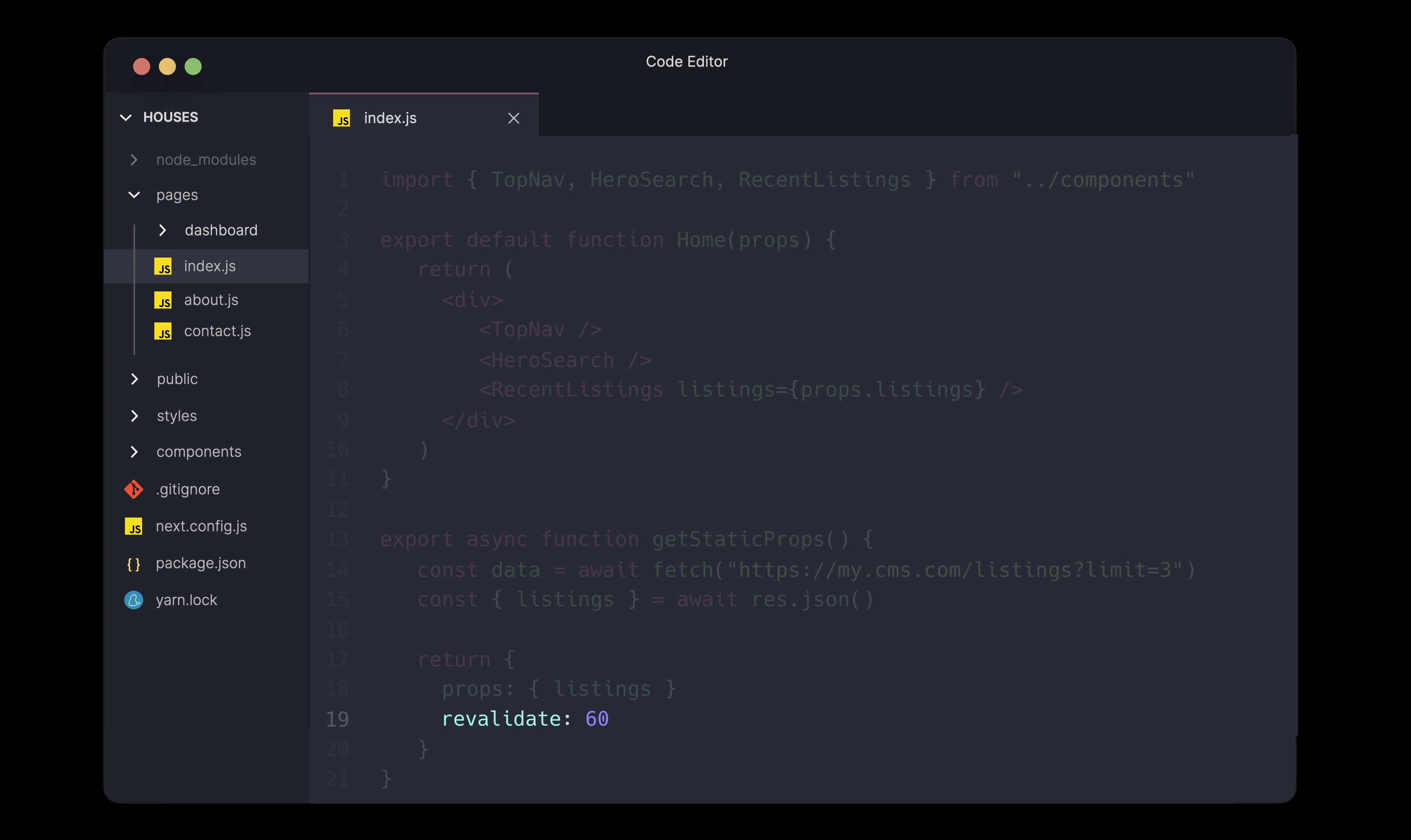

Our long build times have been solved, but we still don’t want to have to redeploy the landing page every time to renew the data with the latest listings.

Instead of only renewing the data at build time, we can automatically invalidate the cache and regenerate the page in the background at a certain interval. We can enable this by adding a revalidate field to the object returned from getStaticProps.
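In code, this is a one-line change to the object returned from `getStaticProps`. The sketch below assumes a hypothetical `fetchRecentListings` helper, and the 60-second interval is purely illustrative.

```typescript
type Listing = { id: string; title: string };

// Hypothetical data-provider call.
async function fetchRecentListings(): Promise<Listing[]> {
  return [{ id: "1", title: "Canal-side apartment" }];
}

export async function getStaticProps() {
  const listings = await fetchRecentListings();
  return {
    props: { listings },
    // Treat the cached page as stale after 60 seconds; the next request
    // after that triggers a regeneration in the background.
    revalidate: 60,
  };
}
```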

Now when a user requests a stale page, meaning a page that has been cached for longer than the specified number of seconds, they get the stale version at first. At the same time, a page regeneration is triggered in the background. Once the page has been regenerated, the cache gets invalidated and updated with the latest version.

When the user requests the page again, for example by refreshing it, they’ll see the updated content.
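To make the sequence of stale and fresh responses concrete, here is a simplified, framework-free model of it for a single page. This is not how Next.js or Vercel implement ISR; `createIsrCache` is a made-up helper, and the regeneration runs inline rather than in a real background task.

```typescript
// Simplified model of ISR's stale-while-revalidate behaviour for one page.
function createIsrCache(render: () => string, revalidateSeconds: number) {
  let cached: { html: string; generatedAt: number } | undefined;

  return function request(nowSeconds: number): string {
    if (cached === undefined) {
      // First request: nothing is cached yet, so generate on demand.
      cached = { html: render(), generatedAt: nowSeconds };
      return cached.html;
    }
    const served = cached.html;
    if (nowSeconds - cached.generatedAt >= revalidateSeconds) {
      // Stale: this visitor still gets the old HTML, while the cache is
      // refreshed for the next request (inline here, in the background in reality).
      cached = { html: render(), generatedAt: nowSeconds };
    }
    return served;
  };
}

let version = 0;
const request = createIsrCache(() => `listings v${++version}`, 60);

console.log(request(0));  // listings v1 (generated on the first request)
console.log(request(30)); // listings v1 (still fresh)
console.log(request(90)); // listings v1 (stale copy served, regeneration triggered)
console.log(request(91)); // listings v2 (regenerated page)
```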

With Incremental Static Regeneration, we can show dynamic content by automatically revalidating the page at a fixed interval.

Although this is already a huge improvement over what we had before, we can run into some issues here. It's likely that our content doesn’t change on every interval, which would mean that we’re unnecessarily regenerating the page and invalidating the cache. Every time, we're invoking our serverless functions, which could result in higher server costs.

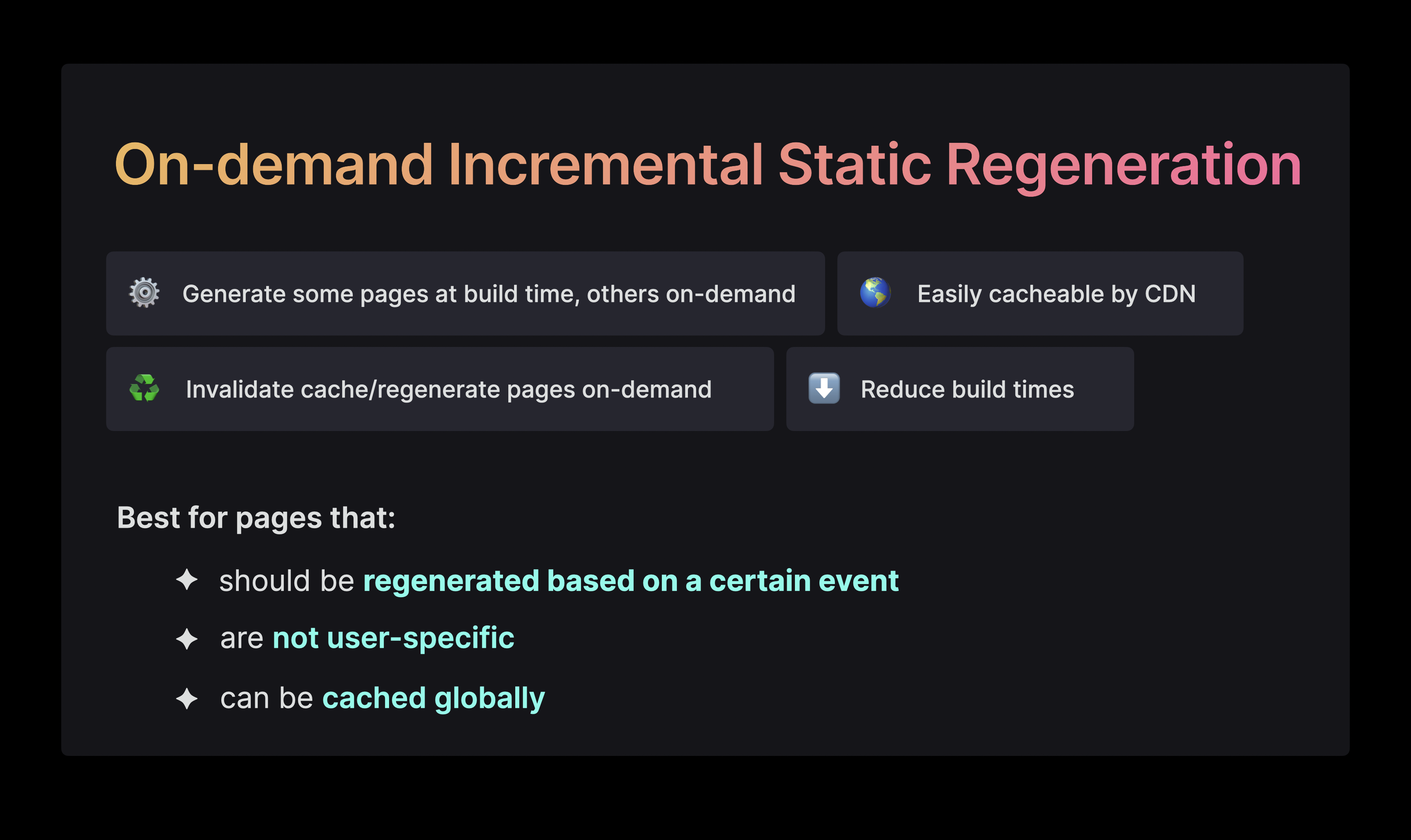

The best option here is to use On-demand Incremental Static Regeneration.

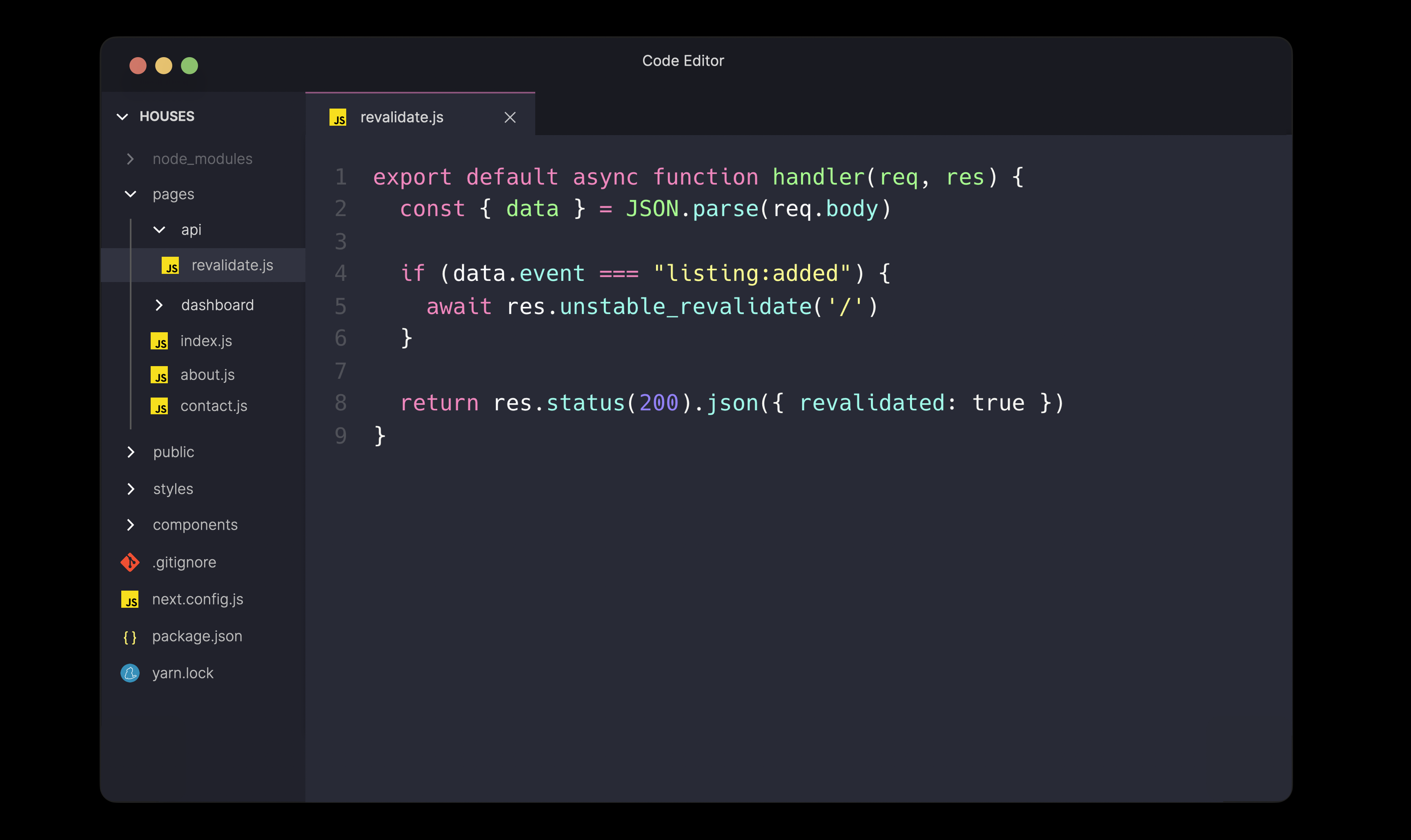

On-demand ISR allows us to use ISR, but instead of regenerating on a certain interval, we can base the regeneration on certain events.

Instead of relying on the revalidate field, we can manually revalidate pages in API routes based on incoming requests.

For example, we can listen to an incoming webhook event that tells us when new data has been added to our data provider. When we invoke the revalidate method, the page on the specified path automatically gets regenerated.
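A webhook handler for this might look roughly like the sketch below. `res.revalidate(path)` is the Next.js call (stable since Next.js 12.2; earlier 12.1 releases exposed it as `res.unstable_revalidate`). The local `ApiRequest` and `ApiResponse` types are simplified stand-ins for the Next.js types, and the `secret` query parameter, `WEBHOOK_SECRET` value, and revalidated path `/` are assumptions for illustration.

```typescript
// Simplified stand-ins for NextApiRequest / NextApiResponse.
type ApiRequest = { query: { secret?: string } };
type ApiResponse = {
  status(code: number): ApiResponse;
  json(body: unknown): void;
  revalidate(path: string): Promise<void>;
};

// Hypothetical shared secret so that only the data provider's webhook
// can trigger a regeneration; load it from an environment variable in practice.
const WEBHOOK_SECRET = "replace-with-a-real-secret";

// Would live in a file such as pages/api/revalidate.ts.
export default async function handler(
  req: ApiRequest,
  res: ApiResponse
): Promise<void> {
  if (req.query.secret !== WEBHOOK_SECRET) {
    res.status(401).json({ message: "Invalid token" });
    return;
  }
  try {
    // Regenerate the landing page that shows the latest listings.
    await res.revalidate("/");
    res.status(200).json({ revalidated: true });
  } catch {
    // If regeneration fails, the previously cached page keeps being served.
    res.status(500).json({ message: "Error revalidating" });
  }
}
```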

Another huge benefit of using on-demand ISR over regular ISR is the fact that the newly generated page gets distributed throughout the entire edge network! With regular ISR, the returned response only gets cached in the regions where users sent requests from.

However, with on-demand ISR, users from all over the world will automatically hit the edge cache, without ever seeing stale content. We also don’t have to worry about unnecessarily invoking a serverless function at every interval if content hasn’t been updated, making it a much cheaper pattern to use.

On-demand ISR gives us all the performance benefits, combined with a great developer experience. We don't have to worry about high server costs as long as we don't trigger a revalidation too often, and we can bring great performance to users all over the world in combination with the edge cache.

Static generation is an amazing pattern that can be used for tons of use cases, especially in combination with ISR.

We can have an incredibly fast and dynamic website that's also always online, since there’s always a cached version available, and we end up with lower costs.

However, there are also tons of use cases where static isn’t the best way to go, for example for highly dynamic, personalized pages that are different for every user.

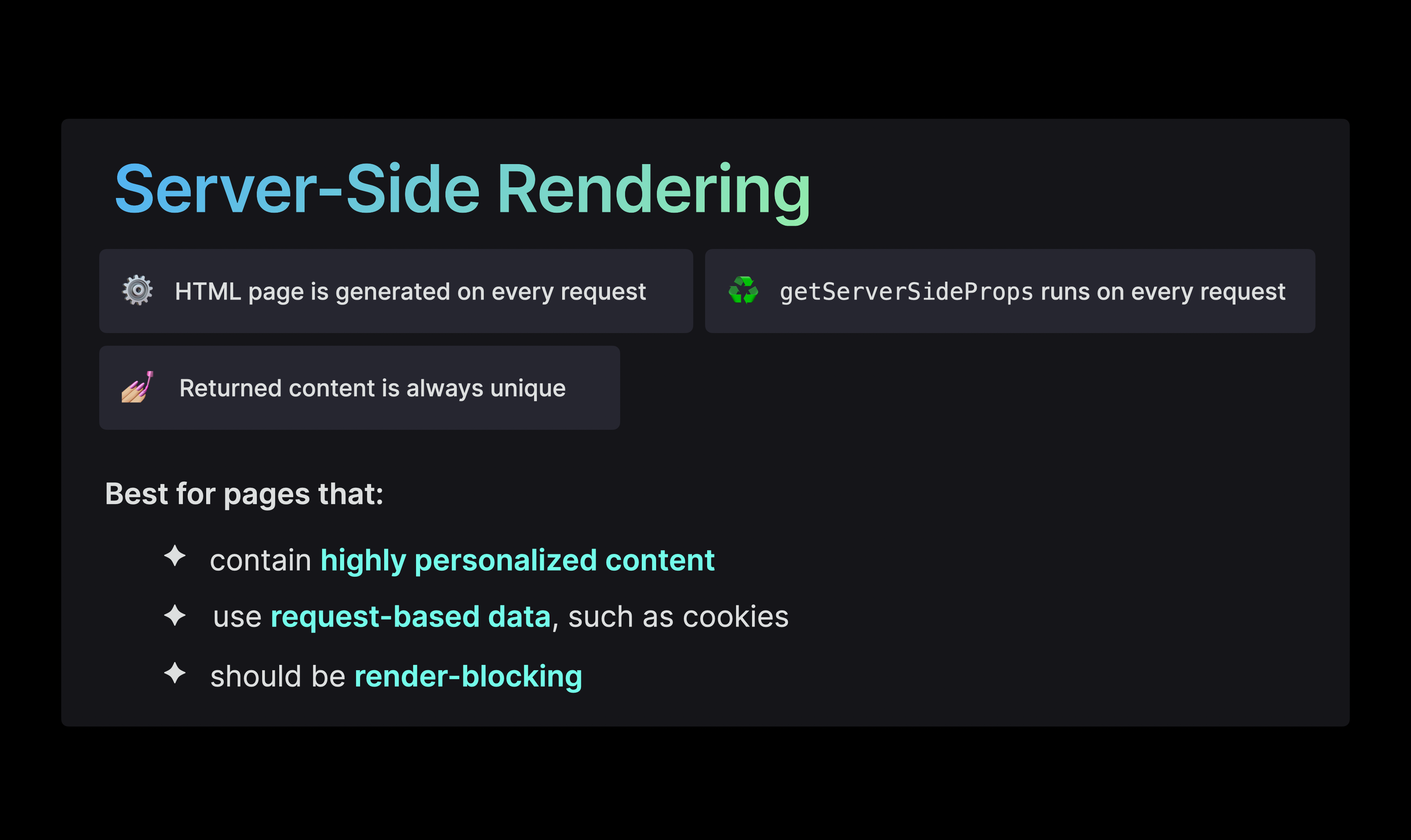

In that case, we might want to use Server-Side Rendering. Whereas we generated the HTML at build time using static generation, with server-side rendering, we generate the HTML on every request.

This can be a great approach for pages that contain highly personalized data, for example data based on the user cookie, or just generally any data that's contained within the user's request. It's also good for pages that should be render-blocking, perhaps based on authentication state.

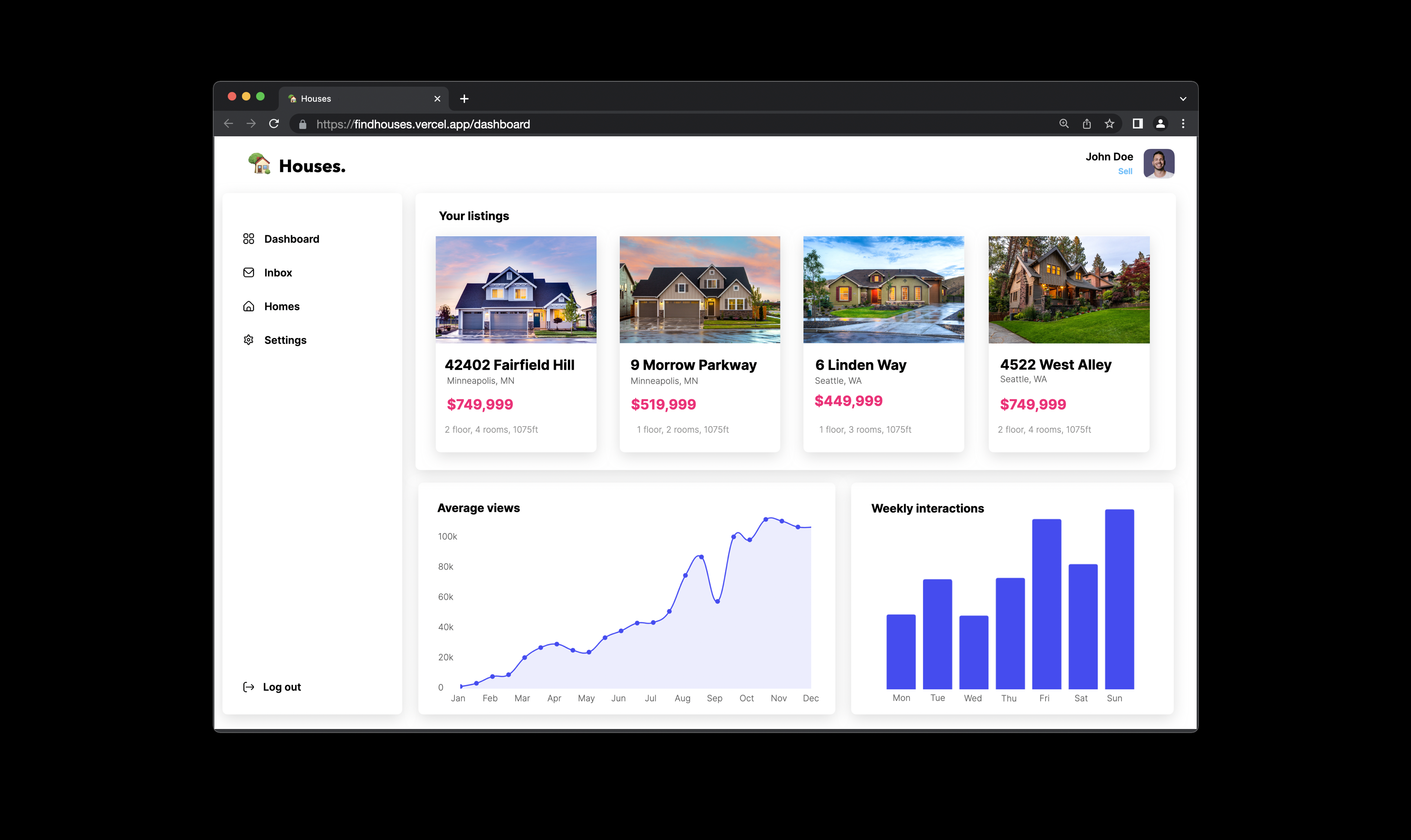

An example of a highly dynamic page that requires a user cookie is a dashboard. This dashboard is only shown when a user is authenticated, and shows mainly user-specific data.

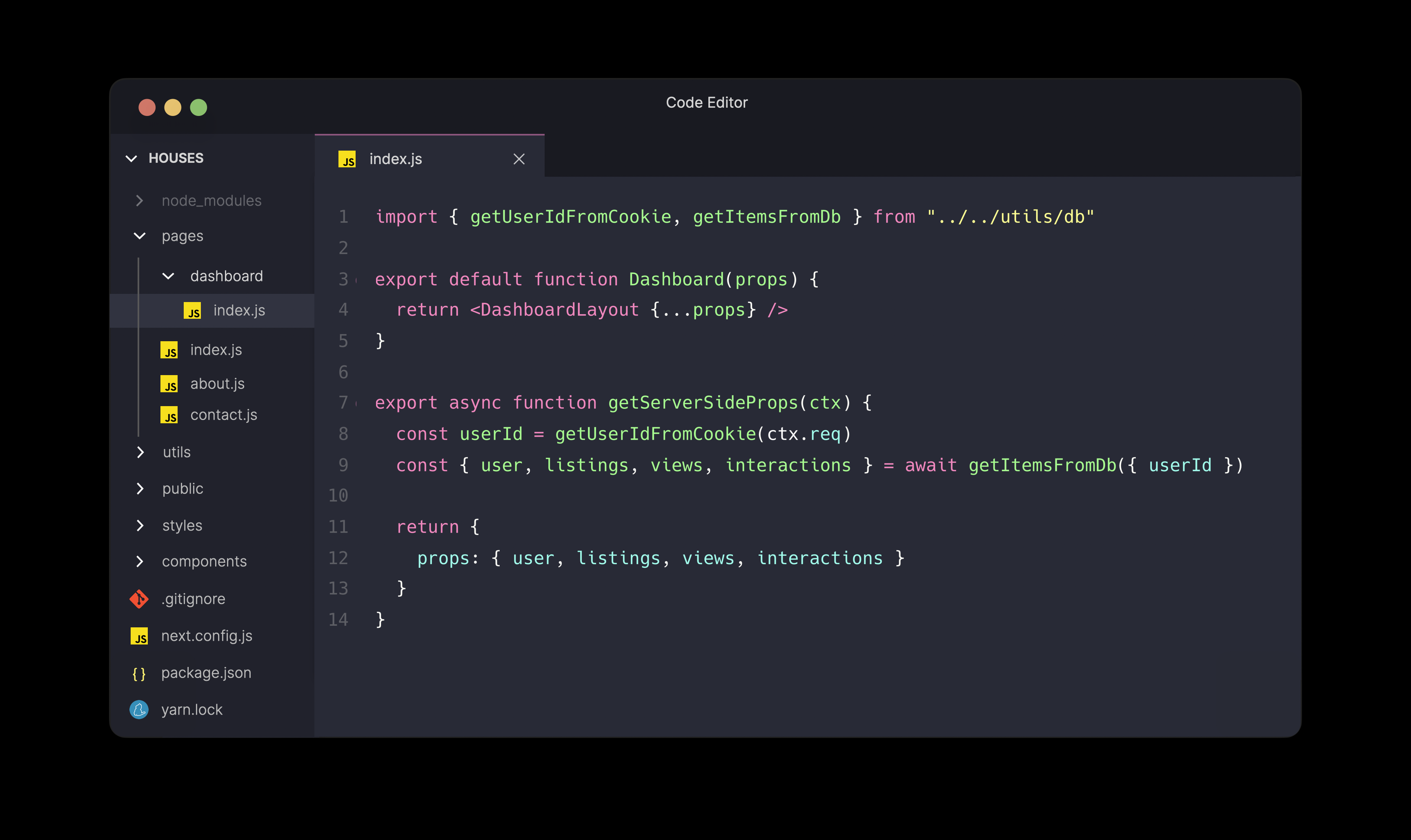

With Next.js, we can server-render a page using the getServerSideProps method. This method runs server-side on every request, and eventually passes the returned data to the page in order to generate the HTML.

When a user requests the page, the getServerSideProps method runs, after which the page gets generated, and sent to the client. The client can quickly render this HTML, and can send another request to fetch the JavaScript bundle to hydrate the elements.

The generated pages are unique to every request, meaning they aren’t automatically cached by our CDN. Although this is the expected behavior, there’s more to take into consideration if you want to get good performance.
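For the dashboard example, a sketch could look like this. `getServerSideProps`, `context.req.cookies`, and the `props` / `redirect` return shapes are Next.js API; the `Context` type is a trimmed-down stand-in for the real context type, and the `session` cookie name, `getUserFromSession` helper, and `/login` path are hypothetical.

```typescript
type DashboardProps = { userName: string };

// Trimmed-down stand-in for the context Next.js passes to getServerSideProps.
type Context = { req: { cookies: Record<string, string | undefined> } };

type Result =
  | { props: DashboardProps }
  | { redirect: { destination: string; permanent: boolean } };

// Hypothetical session lookup; a real app would query its auth provider or database.
async function getUserFromSession(token: string): Promise<{ name: string } | null> {
  return token === "valid-session" ? { name: "Ada" } : null;
}

// Runs on the server for every request to the page.
export async function getServerSideProps(context: Context): Promise<Result> {
  const token = context.req.cookies["session"];
  const user = token ? await getUserFromSession(token) : null;

  if (!user) {
    // Not authenticated: send the visitor to the login page instead of rendering.
    return { redirect: { destination: "/login", permanent: false } };
  }
  return { props: { userName: user.name } };
}
```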

When we look at the network and main thread, it looks pretty similar to what we initially saw with static rendering. The FCP is basically equal to the LCP, and we can easily avoid layout shift, as we're not dynamically rendering content.

However, when we compare static rendering and server rendering, you can see that the TTFB can be very short when we statically render data since it's already pre-generated. If a page is server-rendered however, the TTFB can be a lot longer, as it still needs to be generated.

Although server-rendering is a great method when you want to render highly personalized data, there are some things to take into consideration to achieve a great user experience.

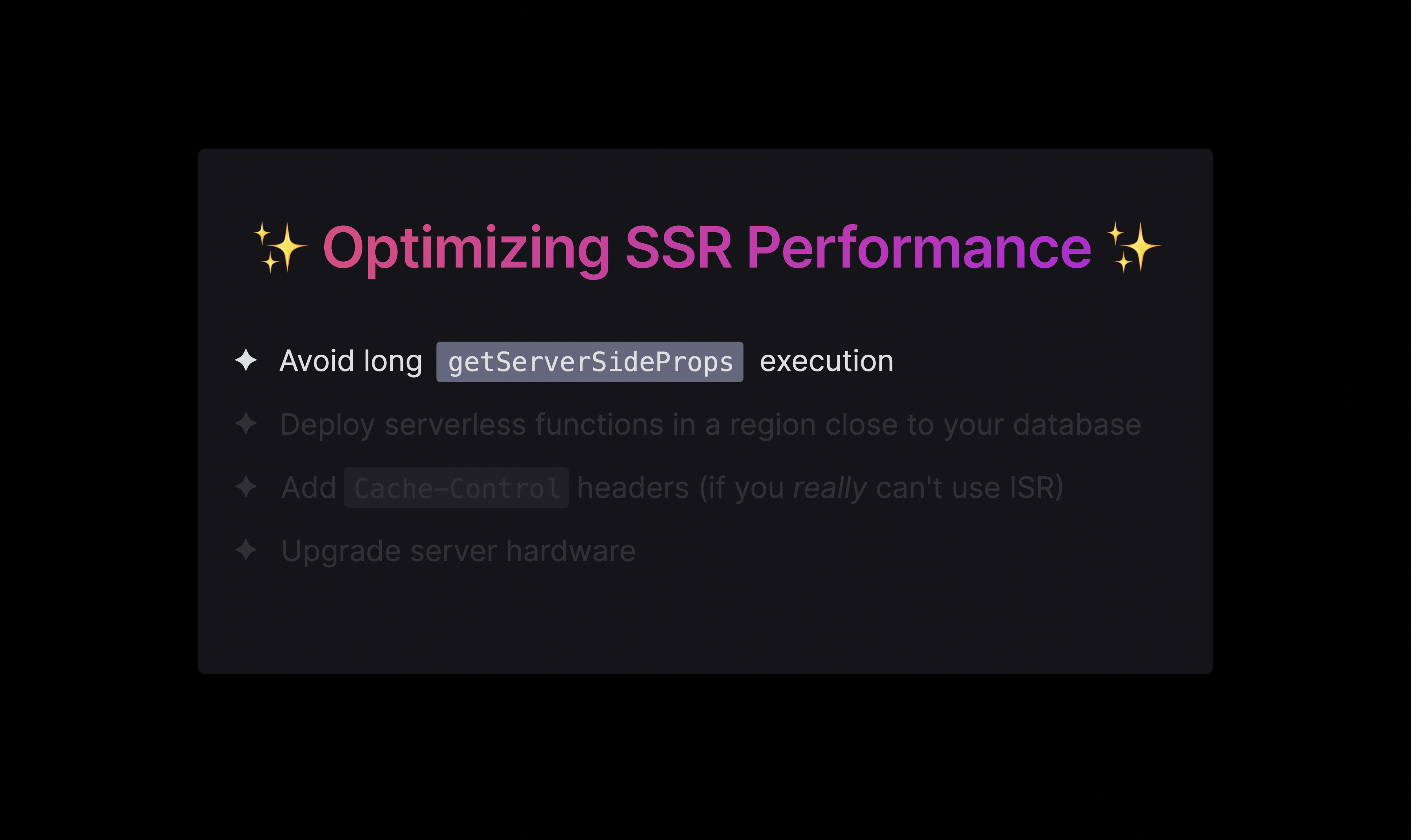

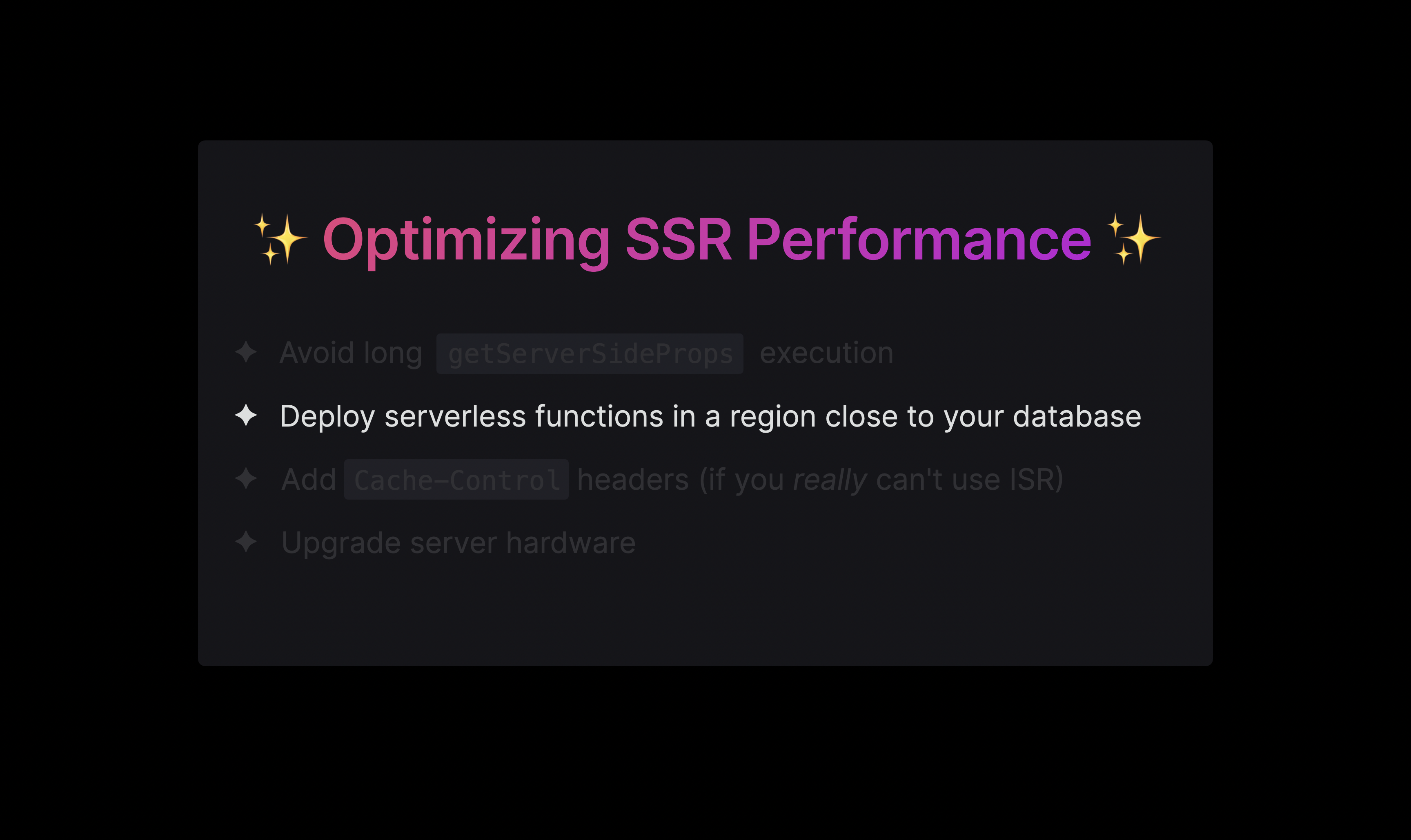

We might also run into way higher server costs, which in some cases is completely worth it, but there are a few things you can do as a user to optimize your SSR performance!

First, we have to make sure that the getServerSideProps method doesn't run too long. The page generation won't start until this method has resolved with data that gets passed to the page.

One thing to keep in mind (and that's often the cause of a long getServerSideProps execution) is the time it takes to query data from your database.

If your serverless function is deployed in San Francisco, but your database is deployed in Tokyo, it can take a while to establish a connection and get the data. Instead, consider moving your database to the same region as your serverless function to ensure your database queries can be a lot faster.

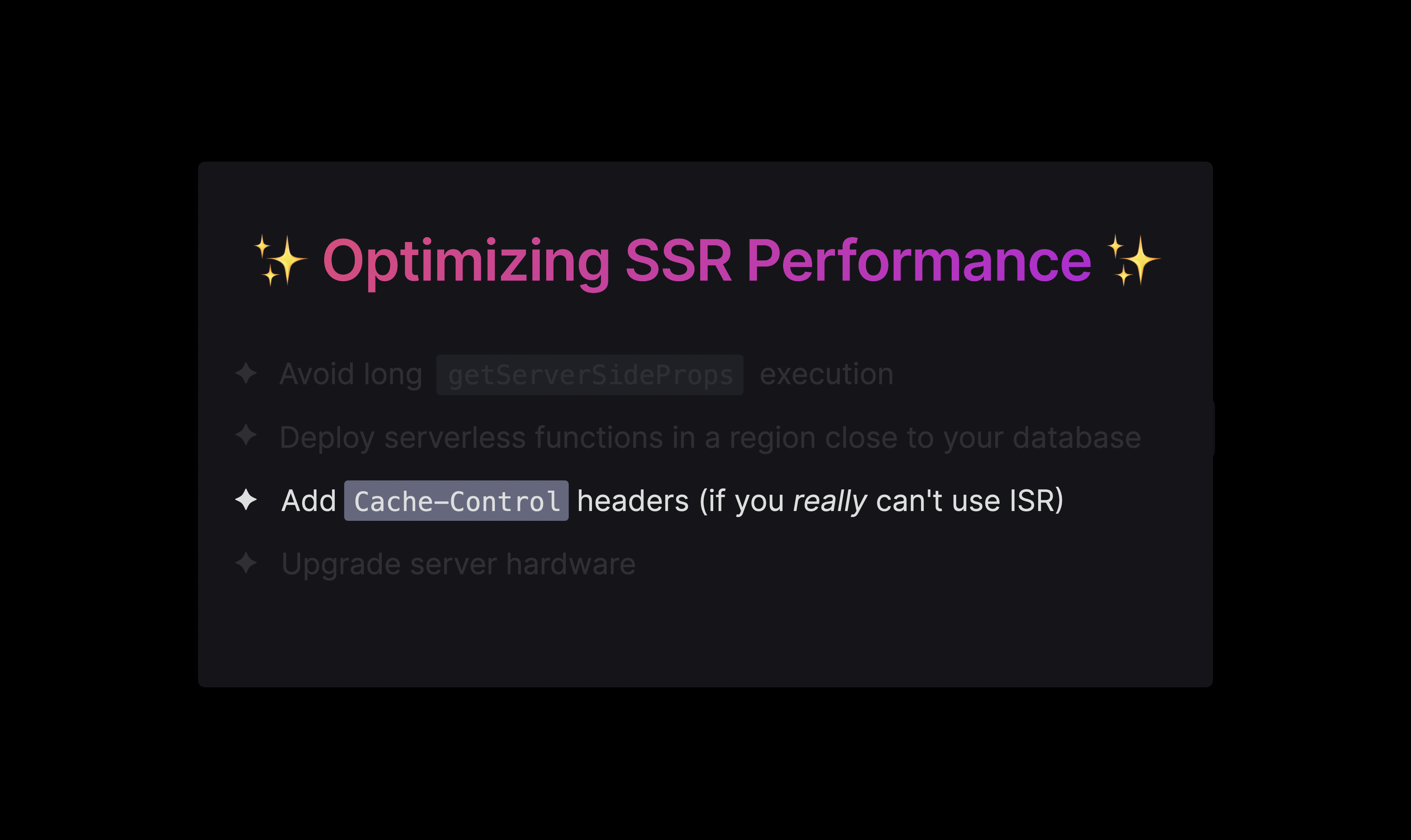

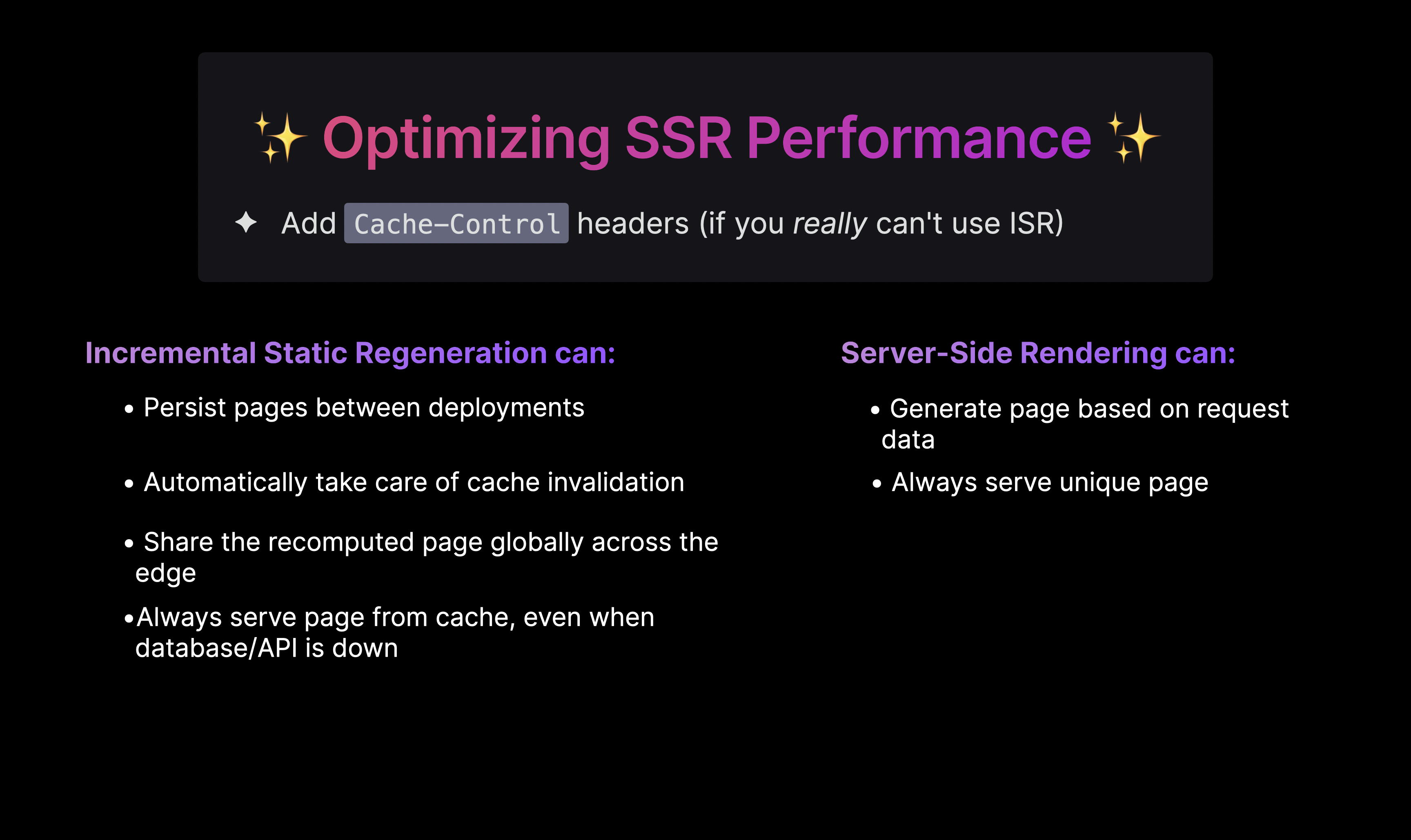

Next, you might improve your performance a bit by adding Cache-Control headers to the responses.
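One way to do this is to set the header on the response inside `getServerSideProps`. The sketch below uses a trimmed-down `Context` type in place of the real Next.js context, and the `s-maxage` and `stale-while-revalidate` values are illustrative rather than recommendations.

```typescript
// Trimmed-down stand-in for the context Next.js passes to getServerSideProps.
type Context = { res: { setHeader(name: string, value: string): void } };

export async function getServerSideProps({ res }: Context) {
  // Shared caches (such as a CDN) may treat this response as fresh for 10 seconds,
  // and may keep serving it stale for up to 59 more seconds while
  // fetching a fresh copy in the background.
  res.setHeader(
    "Cache-Control",
    "public, s-maxage=10, stale-while-revalidate=59"
  );

  return { props: { generatedAt: new Date().toISOString() } };
}
```

A `public` cache directive like this only makes sense when the response is not specific to a single user.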

However, if you find yourself doing that, your page might work even better if you used (On-demand) Incremental Static Regeneration. Besides not having to manually take care of revalidation, the recomputed page will also be shared globally as opposed to one single region. (On-demand) Incremental Static Regeneration also ensures that your website is always online since there will always be a cached version available, whereas Server-Side Rendering is dependent on the availability of the lambda. If a region goes down, so does your website.

Since ISR does not allow you to use request-based data, SSR is still definitely the way to go if your page is using this.

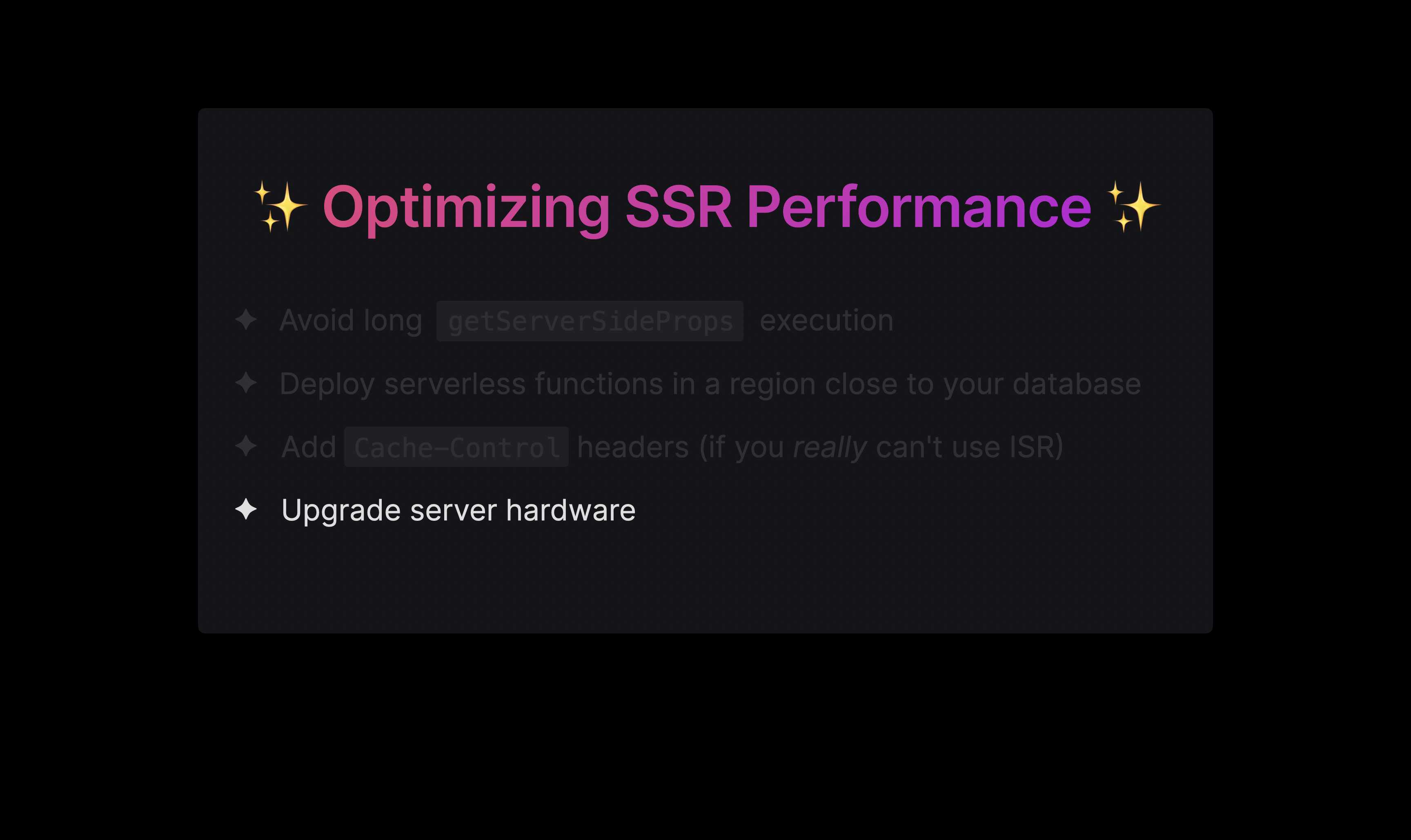

Next is something that is not as easy to do, namely upgrading the server hardware to get faster responses.

When you deploy to Vercel, we use serverless functions to server-render your pages.

Although serverless functions come with many benefits, such as only having to pay for what you use, there are a few limitations. Common issues are the long cold boot, meaning the time it takes to start up the lambda, and slow connections to databases. It’s also not great to call a serverless function all the way on the west coast if you’re located on the other side of the planet.

We're currently exploring Edge Server-Side Rendering, which enables users to server-render from all regions, and experience a near-zero cold boot. Another huge benefit of Edge SSR is the fact that the edge runtime also allows for HTTP streaming.

With serverless functions, we had to generate the entire page server-side, and wait for the entire bundle to be loaded and parsed on the client before hydration could begin.

With Edge SSR, we can stream parts of the document as soon as they're ready, and hydrate these components granularly. This means that users no longer have to wait a long time before they can see anything on the screen, as the components stream in one by one.

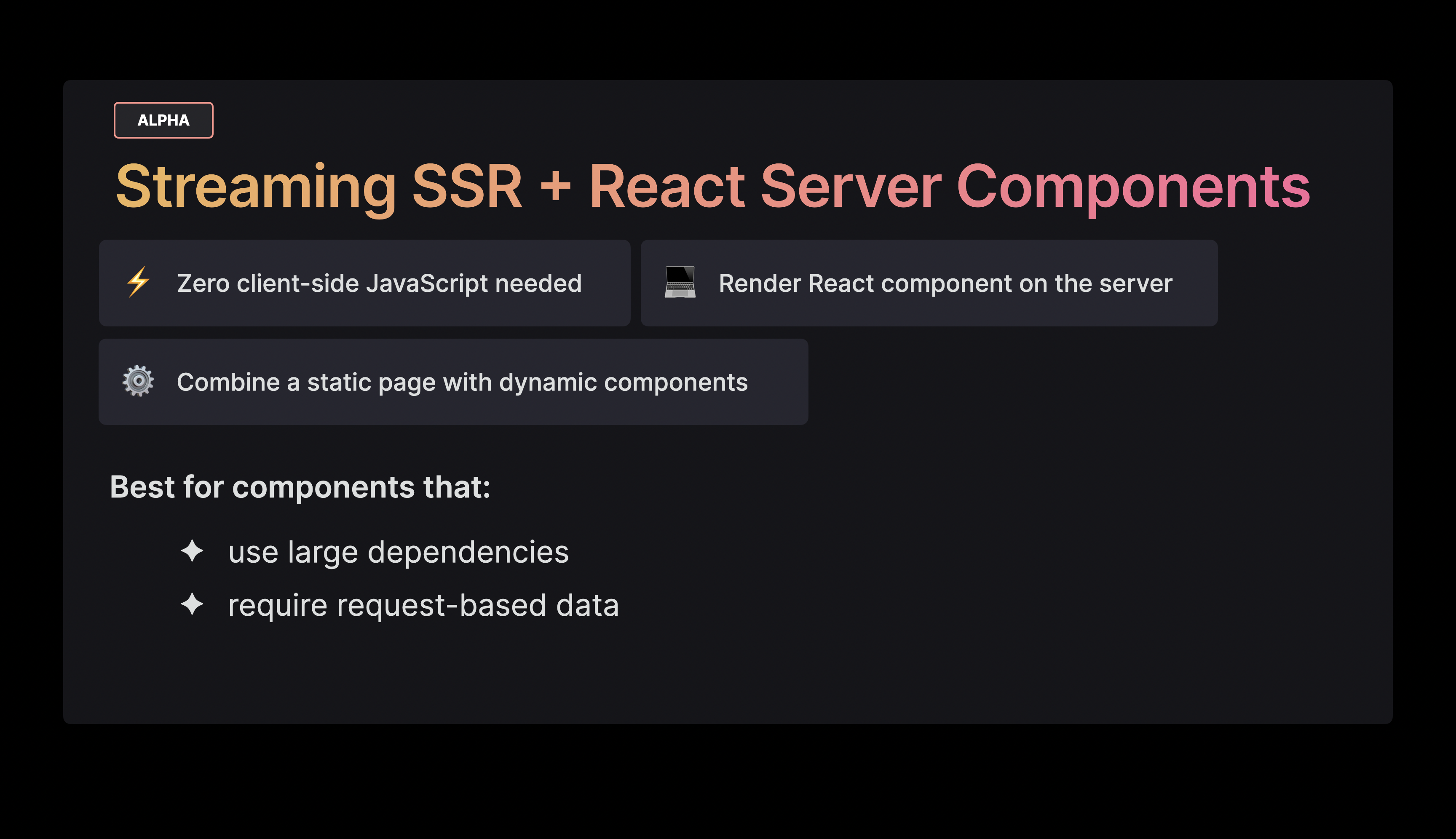

Streaming SSR also enables React Server Components. Now I'm not going into the nitty gritty details of React Server Components, but the combination of Edge SSR with React Server Components can allow us to have a beautiful hybrid between static and server rendering.

React Server Components allow us to partially render React components on the server, which is especially useful for components that require large dependencies, as we no longer have to download these dependencies on the client.

For example, say we wanted to show the landing page again, but this time with region-specific listings for the user. The vast majority of the page only contains static data; it's just the listings that require request-based data.

Instead of having to server-render the entire page, we can now choose to only render the listings component server-side, and the rest client-side. This gives us the great performance of Static Rendering, with the dynamic benefits of Server-Side Rendering.

Although we've just covered many patterns to choose from, knowing which pattern makes the most sense for your use case can give your application huge performance benefits. On Vercel, you can opt into these techniques on a per-page basis, making it extremely easy to scale without running into performance issues when your application grows.

Static Rendering and Server Rendering both have their place in the world, and we're working on a future where we can create highly personalized websites with great performance. The web is extremely powerful, and it’s only getting better, especially with an Edge-first approach to look forward to!